Building a Linux + Docker + Ansible + Kubernetes Learning Environment from Scratch (1 Master + 3 Nodes)

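All I ever wanted was to live out the true nature that was trying to emerge from within me. Why was that so very difficult? (Hermann Hesse, *Demian*)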

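Preface

- I have wanted to learn K8s for a long time but never had an environment for it, and K8s itself is fairly heavy. Before going back to school I rented an Alibaba Cloud ECS instance with a single core and 2 GB of RAM. A single-node K8s barely fits on it, a multi-node cluster is out of the question, and the demos in the book cannot be followed. A cluster needs several nodes, which means operating on several machines at once, so I am also taking the chance to review Ansible.
- This is a tutorial for building a learning environment from scratch on Win10. It covers:
  - Installing four Linux virtual machines with VMware Workstation: one Master (management) node and three Node (compute) nodes.
  - Using bridged networking so the VMs can reach the internet and can be reached over SSH from the Win10 host.
  - Passwordless SSH login from the Master to any Node.
  - Configuring Ansible with the Master as the controller node, using a role to configure time synchronisation and playbooks to install and configure Docker, K8s and so on.
  - Installing the Docker and K8s cluster packages and configuring the network. K8s is installed from binary packages here, not with kubeadm.
- VMware Workstation and the Linux ISO image are assumed to be at hand already. VMware Workstation is assumed to be installed; if it is not, download it first.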


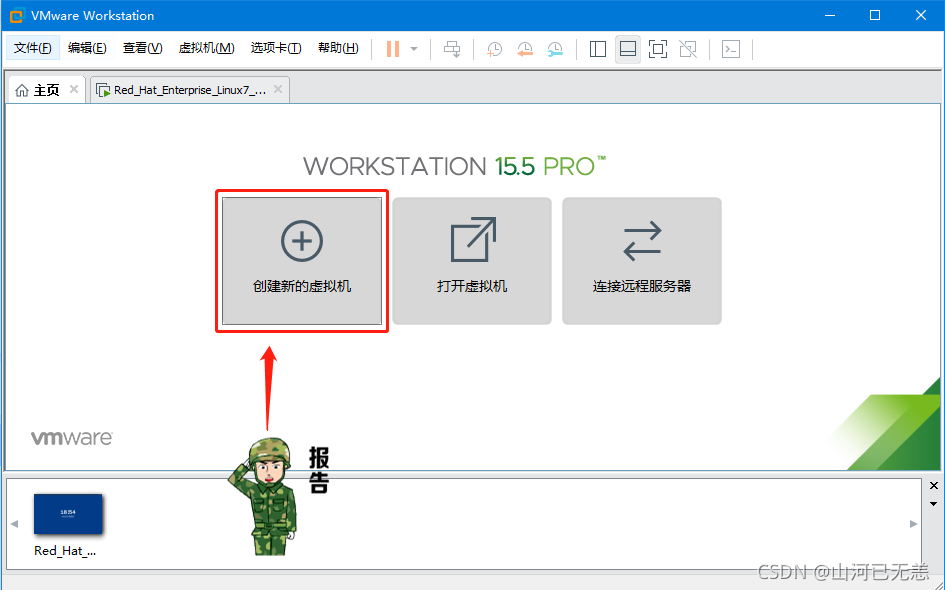

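I. Linux System Installation

This assumes VMware Workstation is already installed (VMware-workstation-full-15.5.6-16341506.exe) and a Linux installation image is ready (CentOS-7-x86_64-DVD-1810.iso). The versions in parentheses are the ones I used. The approach:

Install one Node machine first, then clone it to obtain the other two Node machines and the Master machine.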

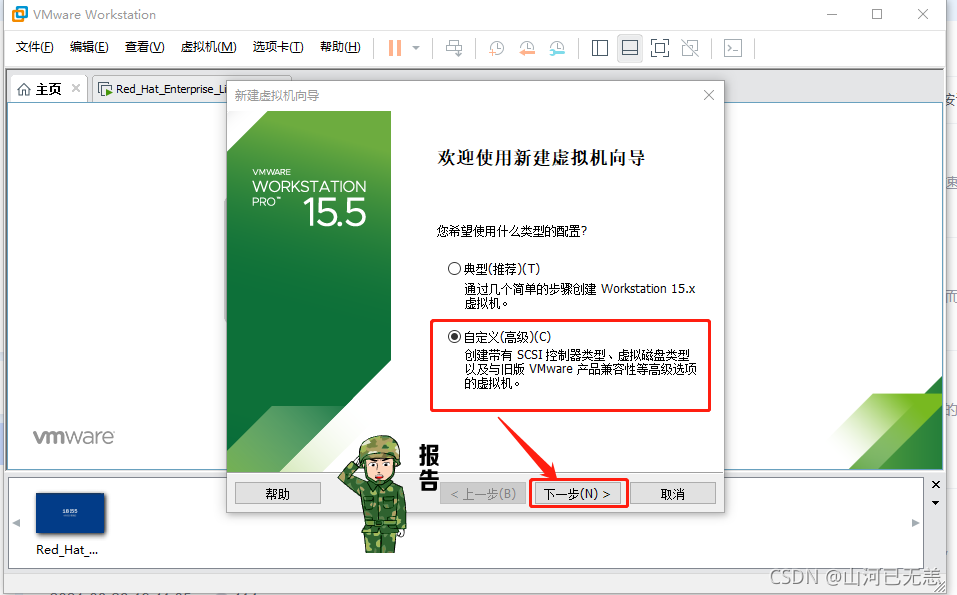

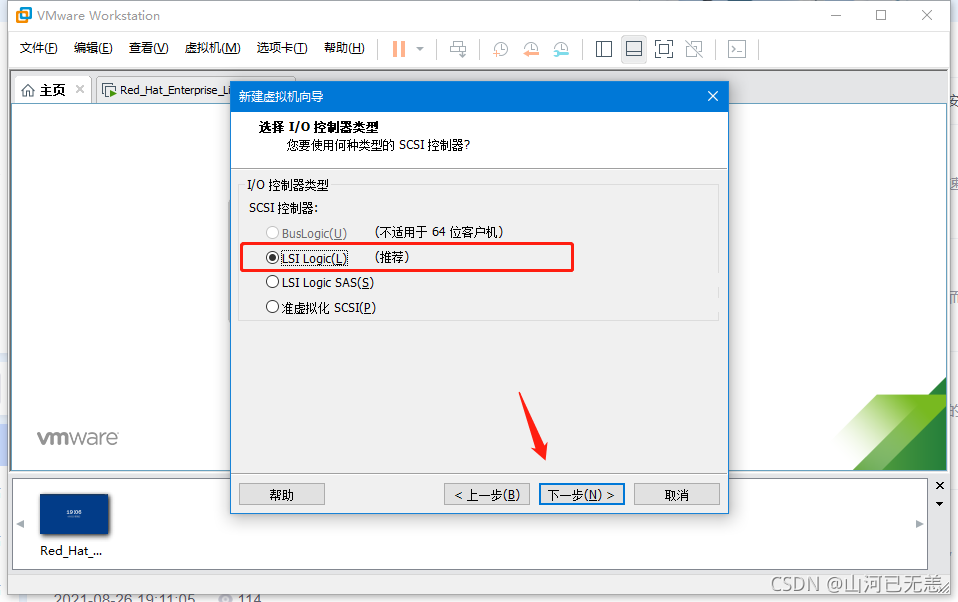

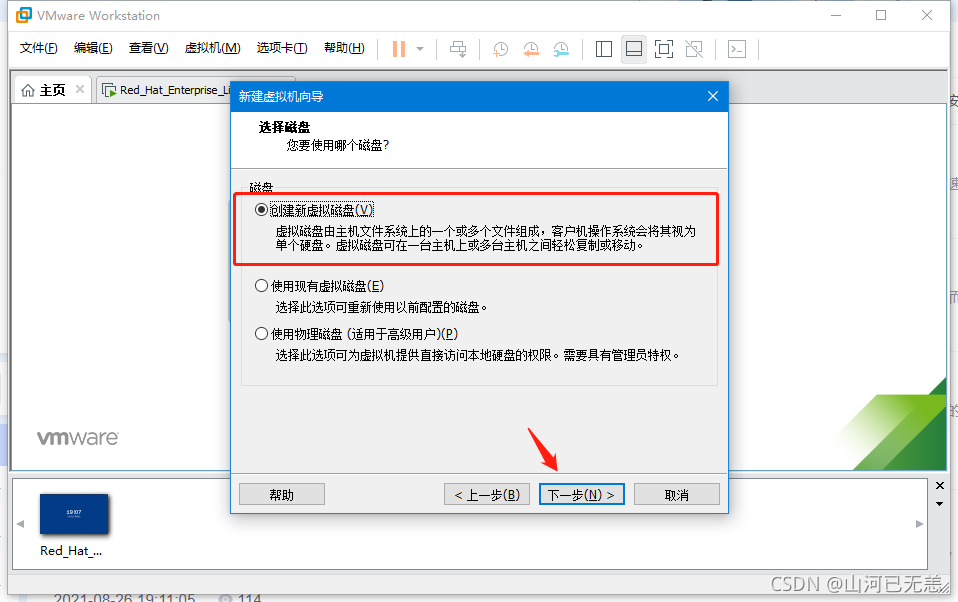

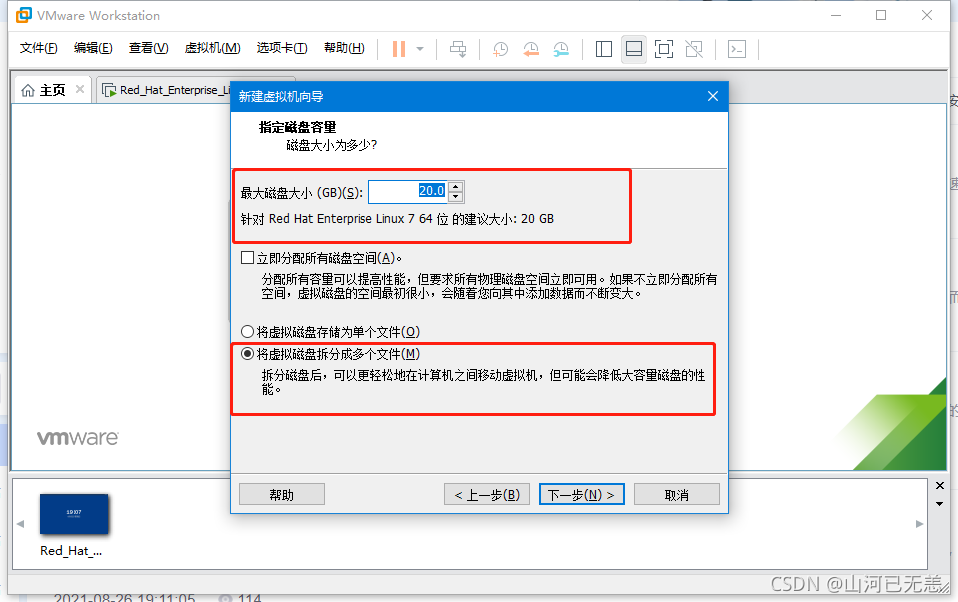

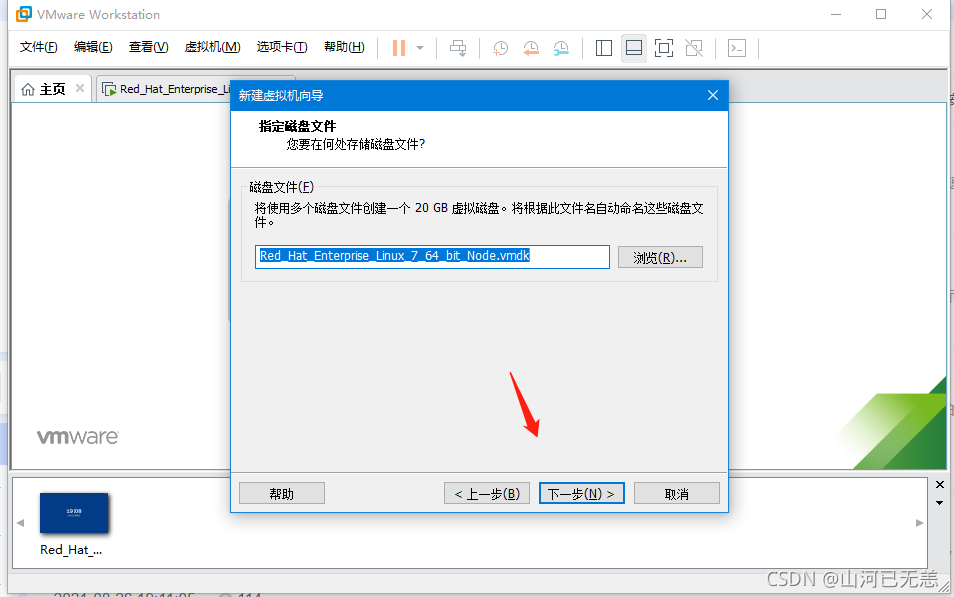

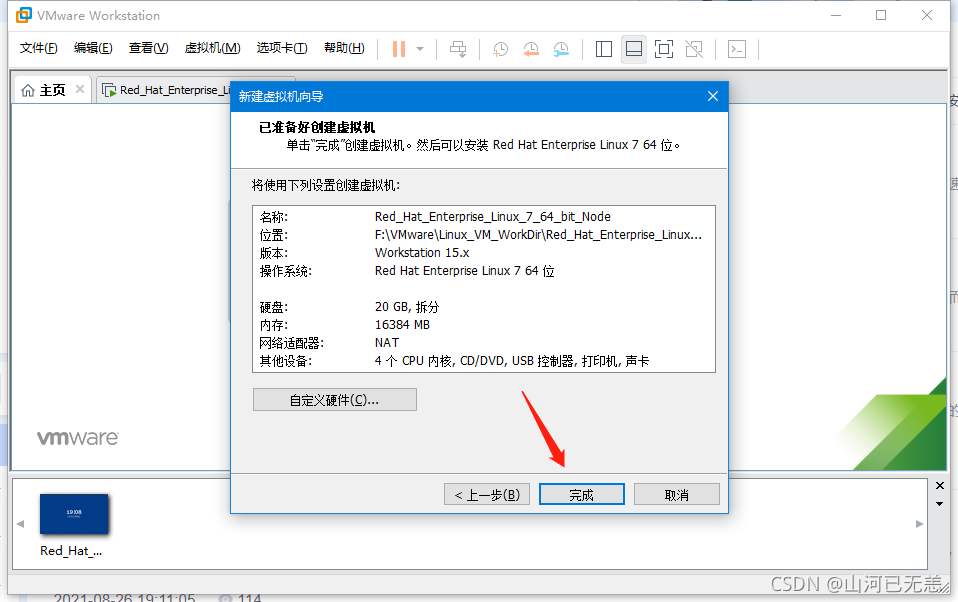

1. System installation

Installation steps:

- Give the virtual machine a name and choose where to store it.

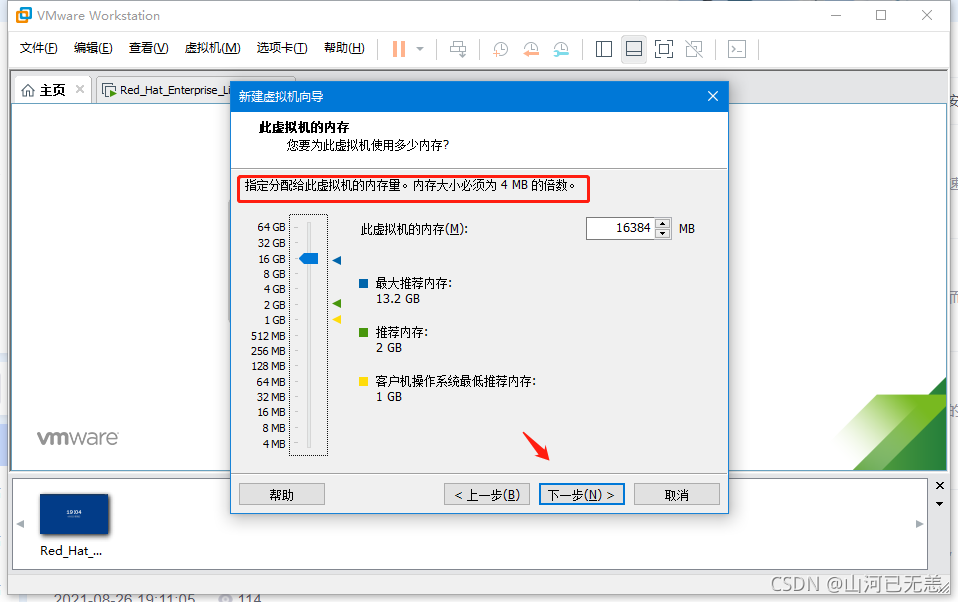

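- Set the memory according to your own machine: about 2 GB if the host has 8 GB, 4 GB if it has 16 GB, and 8 GB if it has 32 GB.
- Put the ISO image stored on your system into the virtual optical drive (use "Browse" to locate it).
- If the VM refuses to start because the memory is too large, reduce the memory.
- Click inside the screen so the cursor is captured by the VM, then use the up and down keys to select the first entry.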

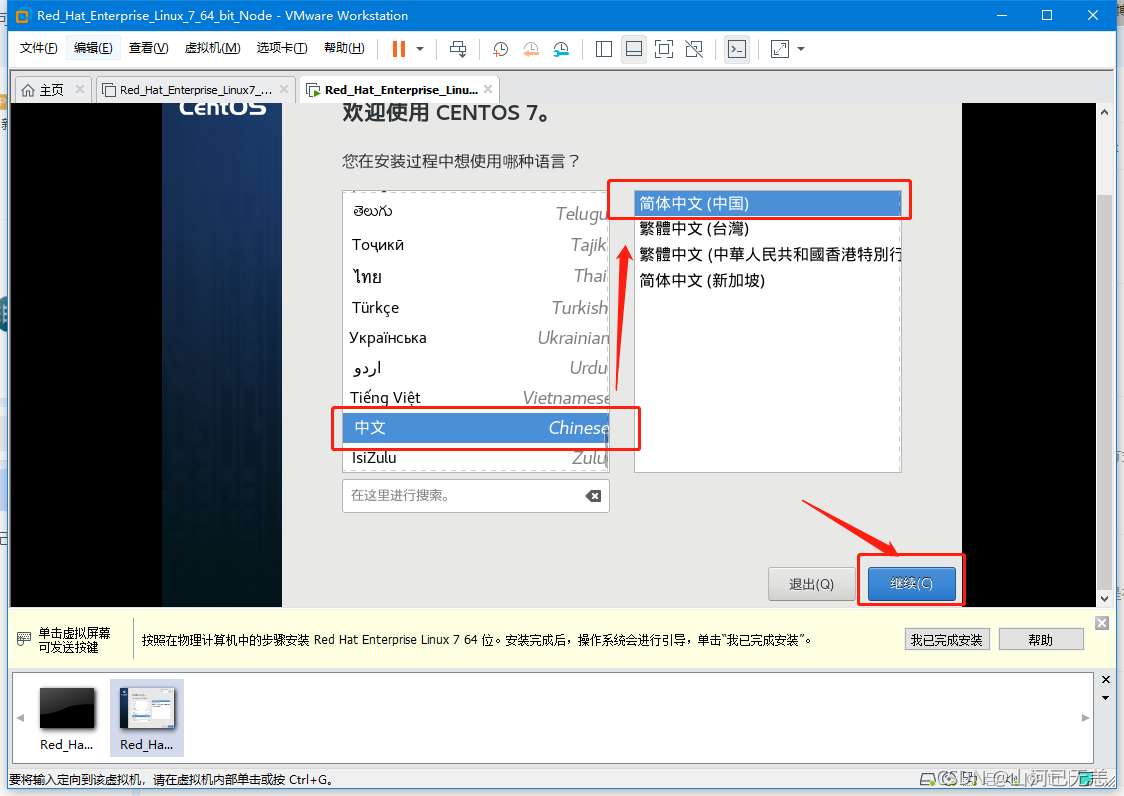

- Beginners are advised to choose "简体中文(中国)" (Simplified Chinese, China) and click "Continue".

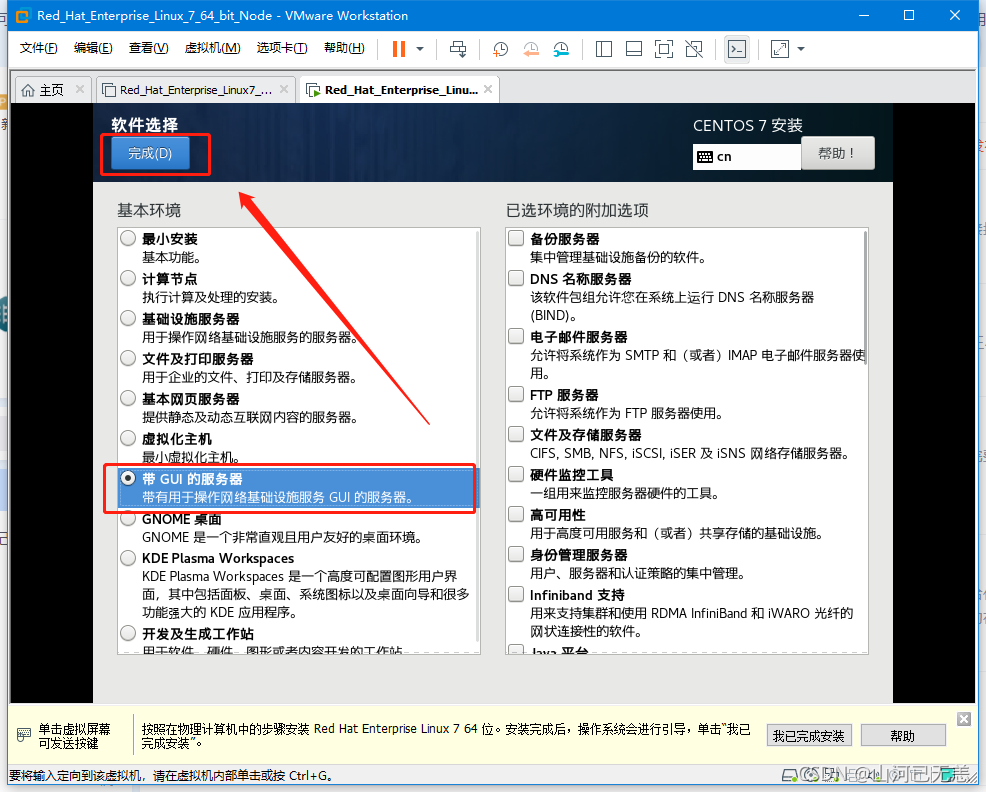

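- Check the "Installation Summary" screen and make sure every item marked with an exclamation mark has been completed, then click "Begin Installation" at the bottom right to start the actual installation.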

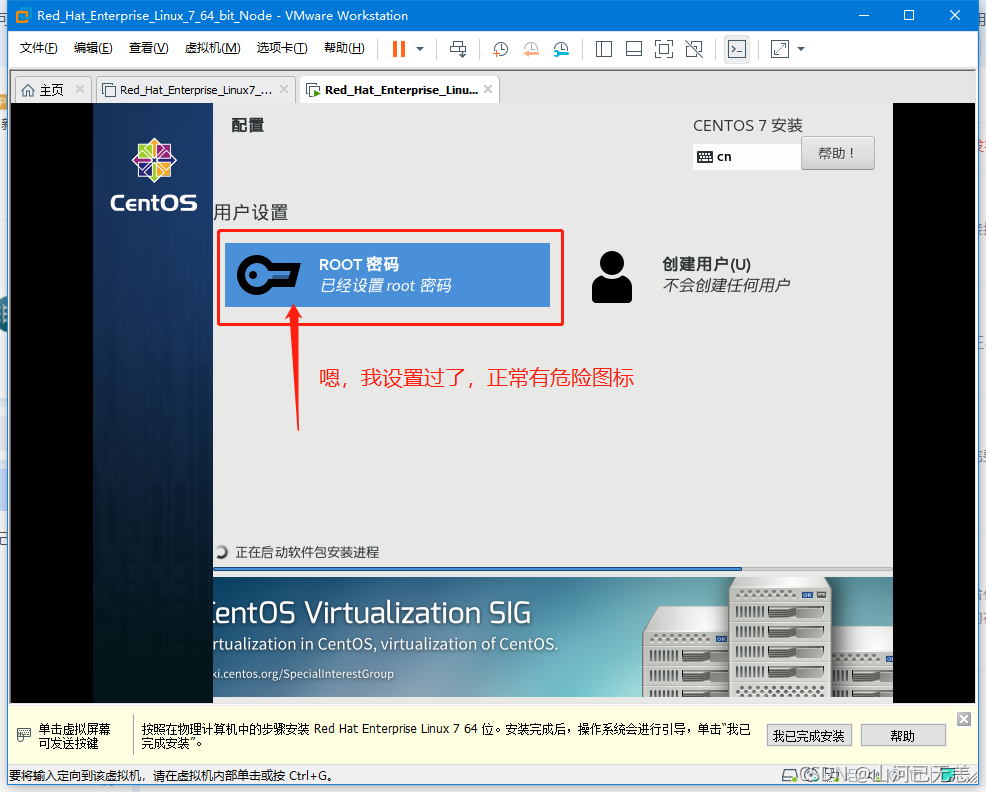

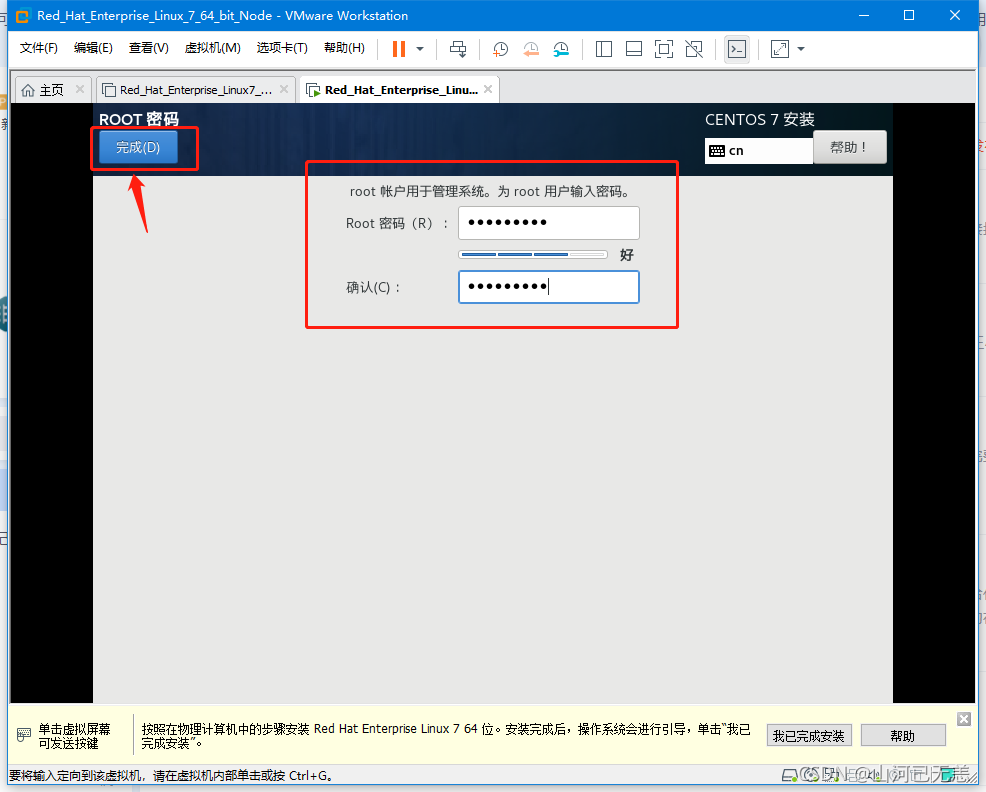

- If the password is too simple, the "Done" button has to be clicked twice.

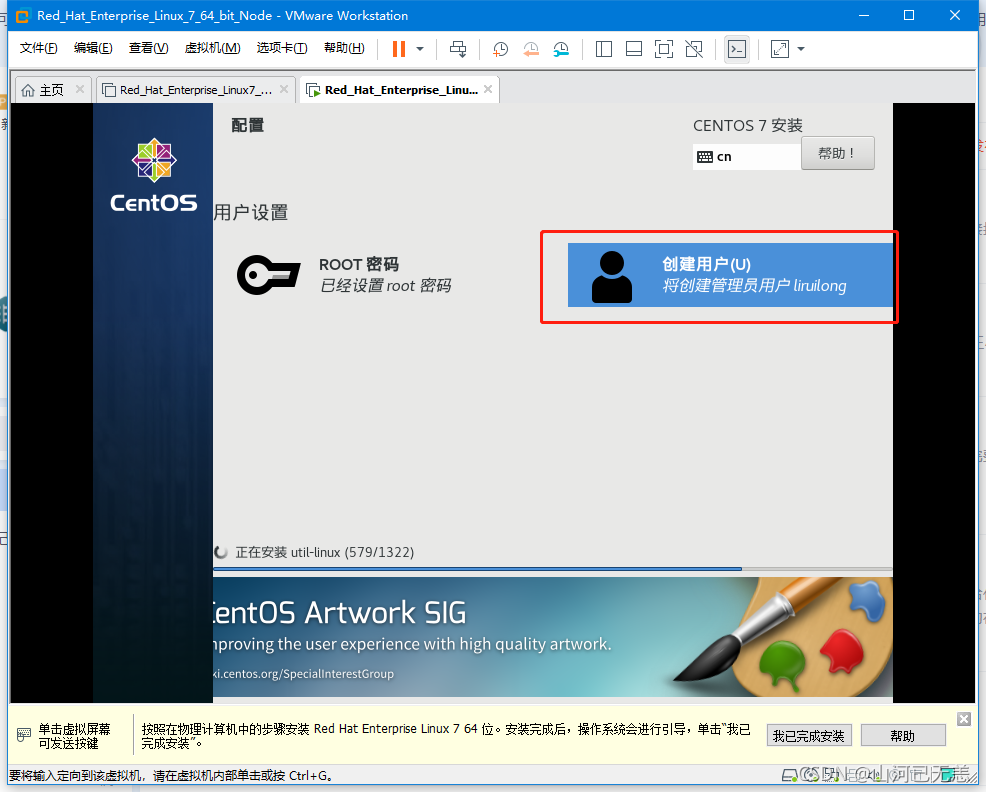

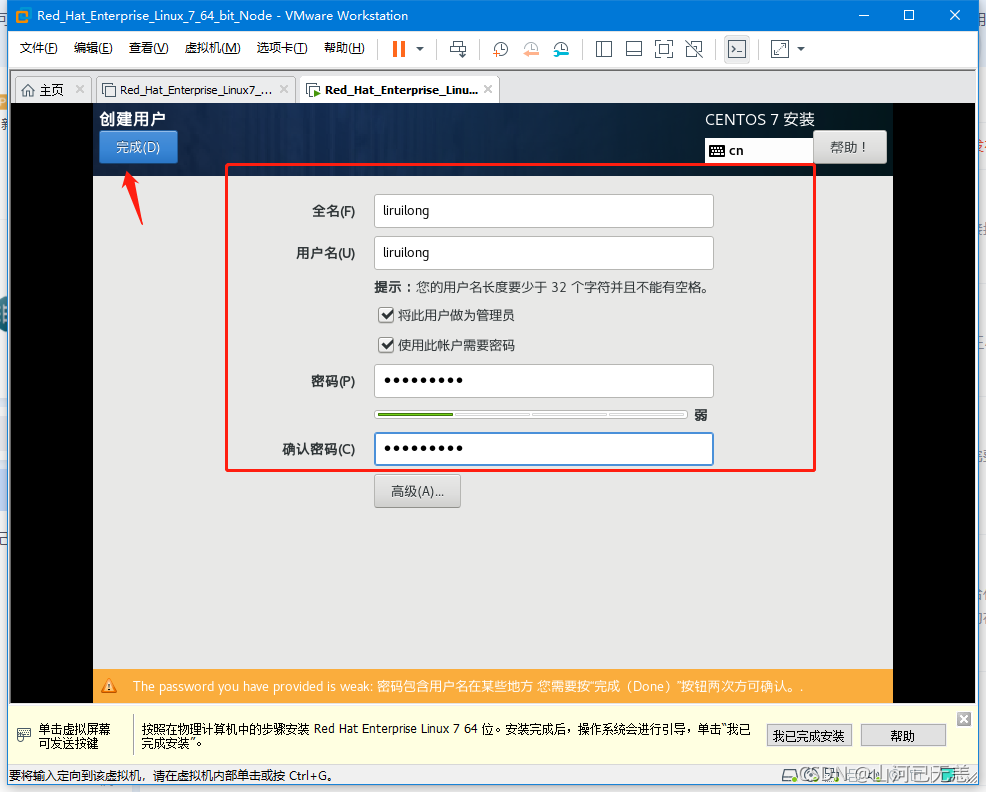

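- Create a user (user name and password are up to you). After filling in the form, click "Done" twice.
- The installation takes a while, so do something else in the meantime. When it finishes, a "Reboot" button appears; just reboot.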

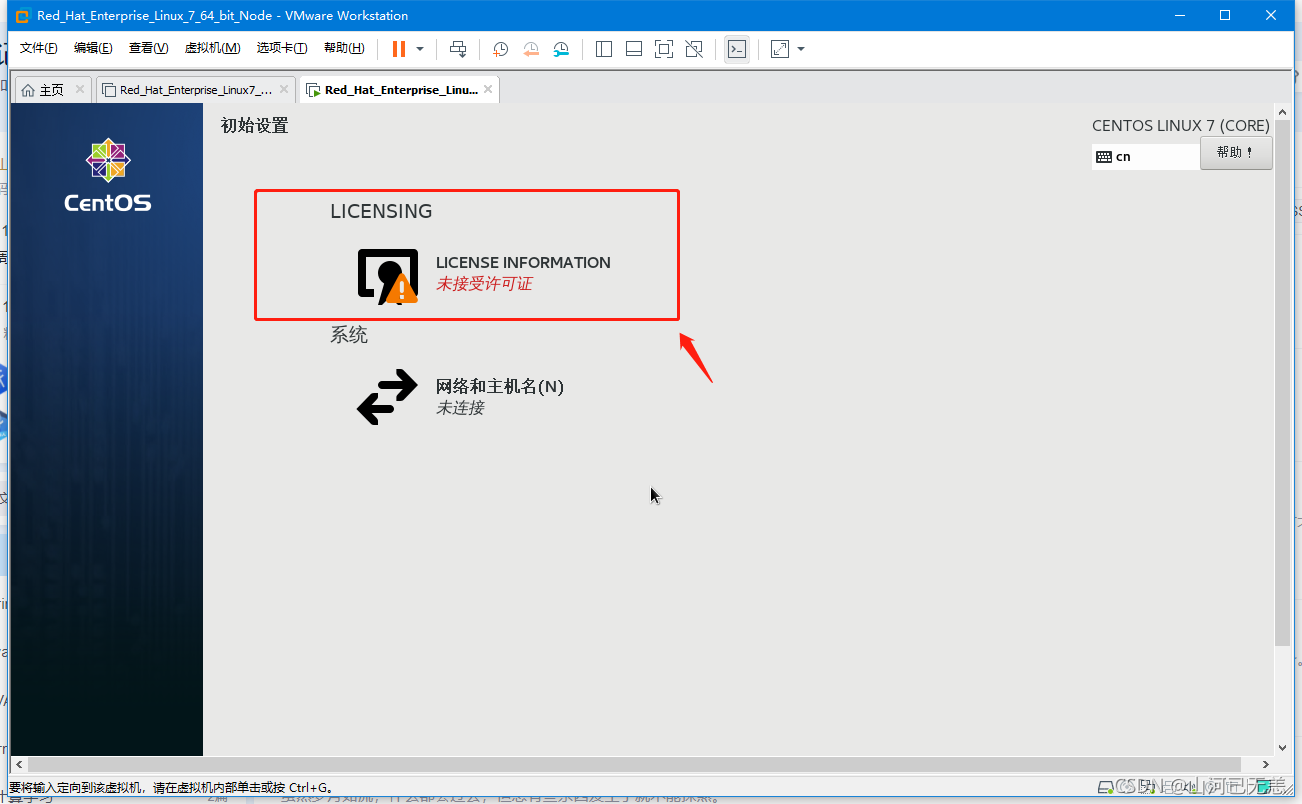

- Start the system. This takes some time, so be patient.

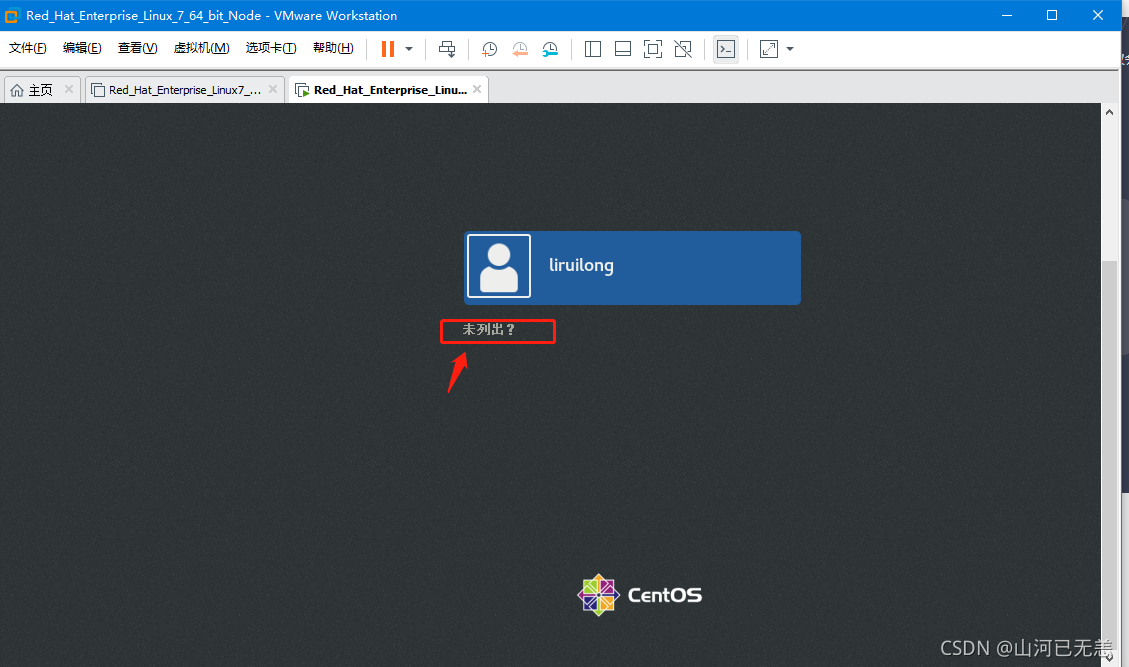

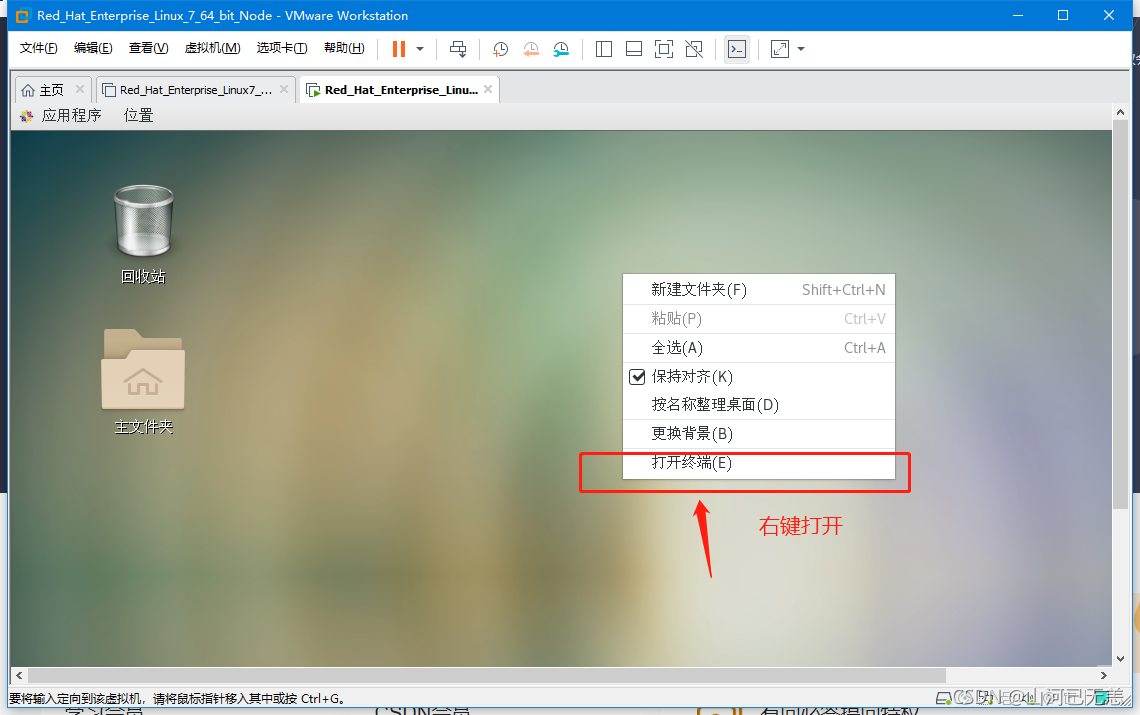

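- On the login screen choose "Not listed?" and log in as root. A few welcome pages follow; just click Next through them.

Next, change the **command prompt** to something nicer, which makes studying more pleasant. Either type the following directly or add it to `~/.bashrc` (`vi ~/.bashrc`):

`PS1="\[\033[1;32m\]┌──[\[\033[1;34m\]\u@\H\[\033[1;32m\]]-[\[\033[0;1m\]\w\[\033[1;32m\]] \n\[\033[1;32m\]└─\[\033[1;34m\]\$\[\033[0m\]"`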

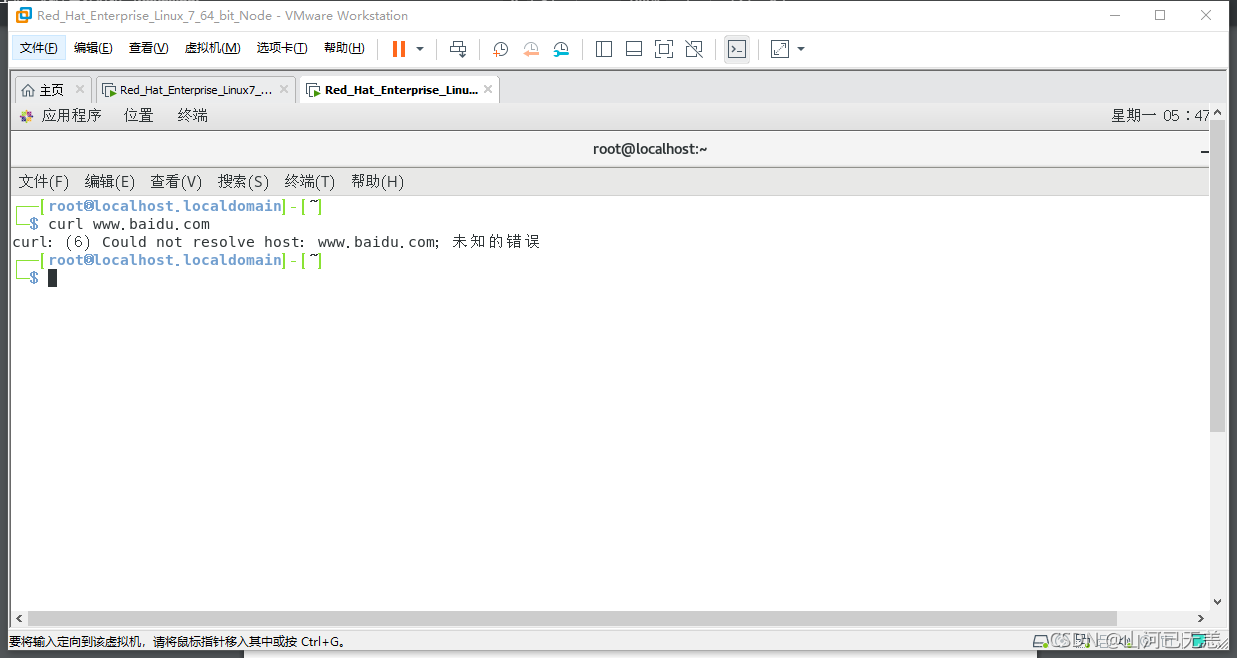

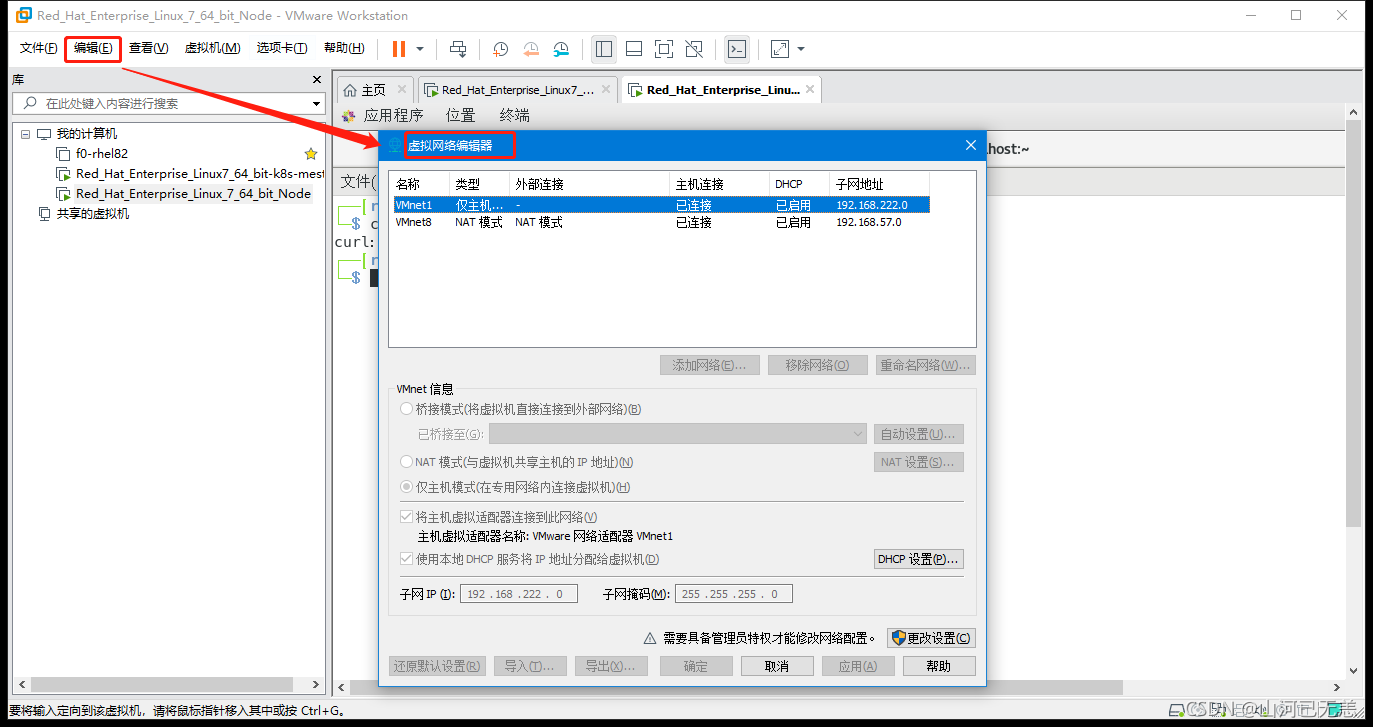

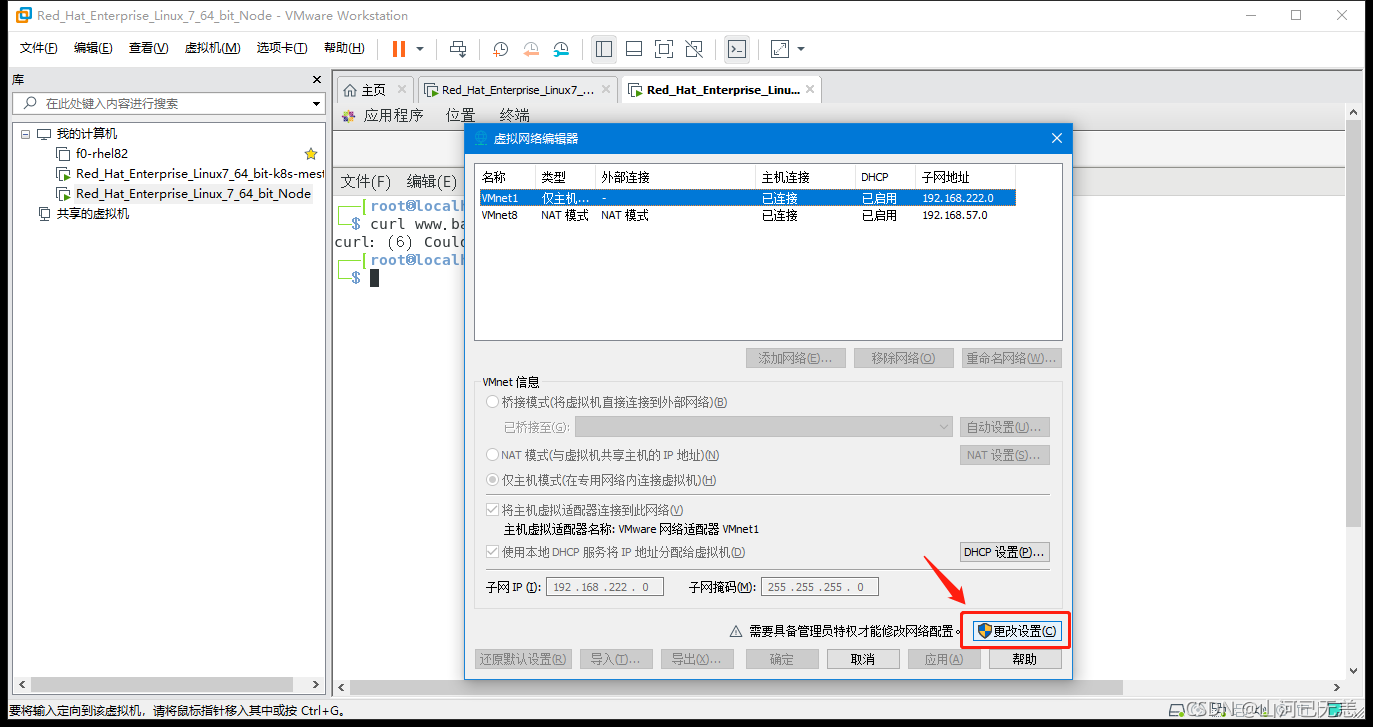

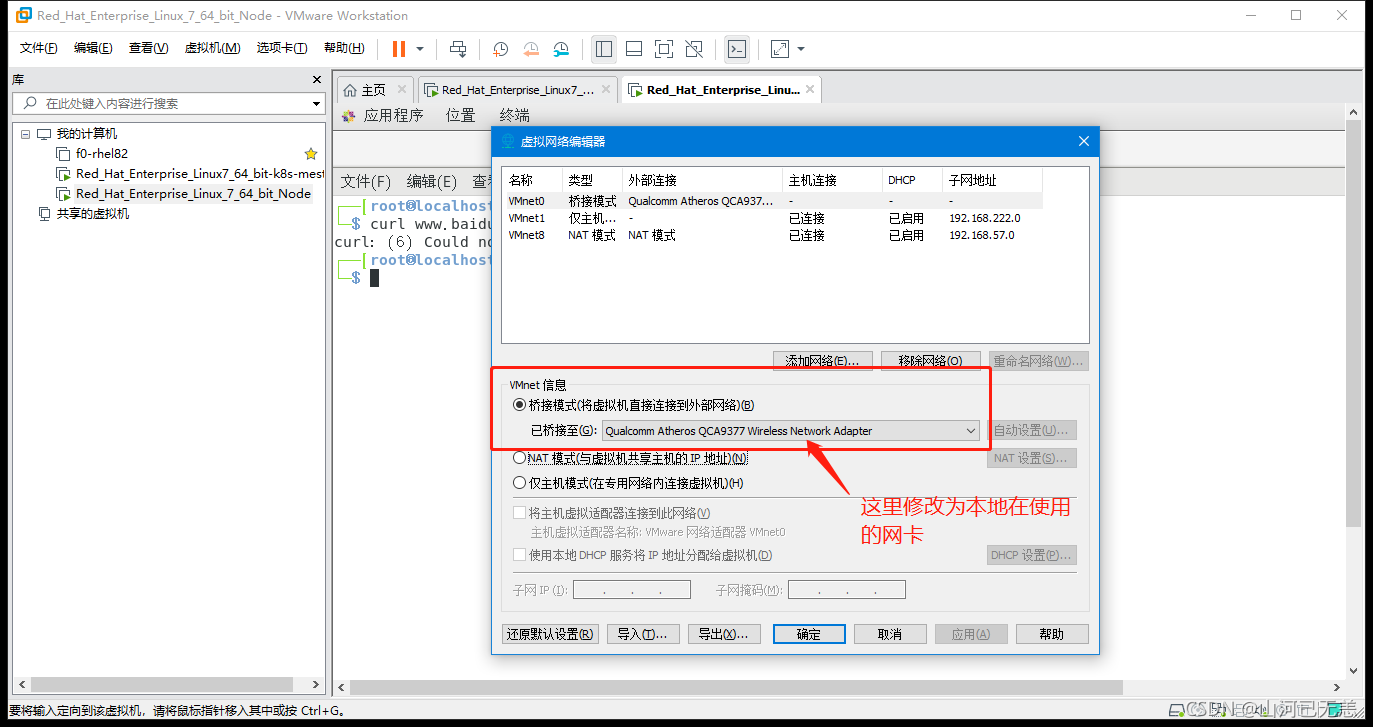

2. 配置网络

| &&&&&&&&&&&&&&&&&&配置网络步骤&&&&&&&&&&&&&&&&&& |

|---|

|

|

|

|

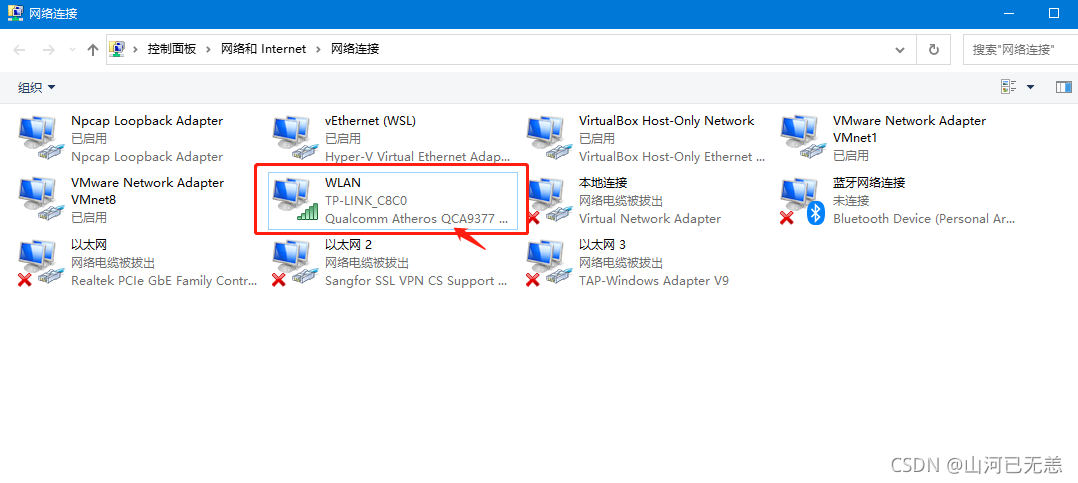

| 桥接模式下,要自己选择桥接到哪个网卡(实际联网用的网卡),然后确认 |

|

|

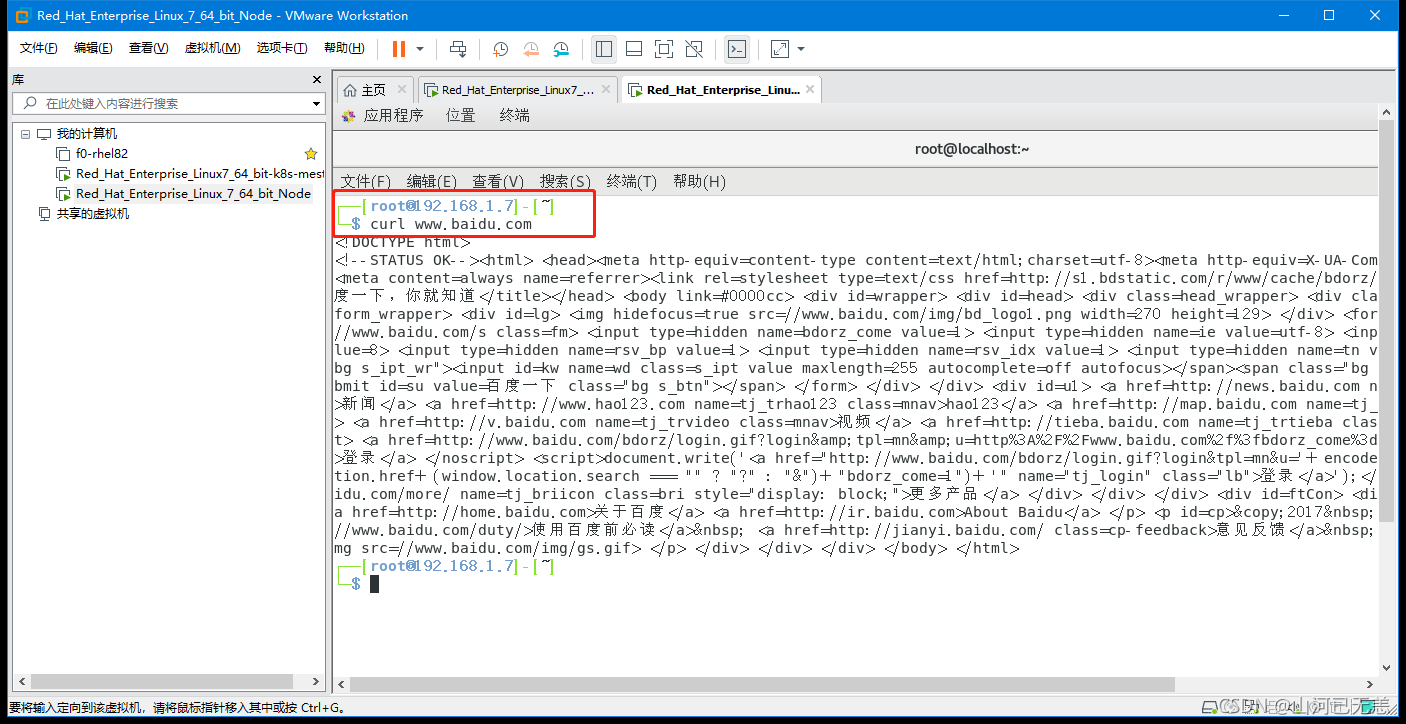

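- Set the NIC to DHCP mode (automatic IP assignment). One thing worth noting: because the addresses are dynamic, switching to a different network changes the IP of every node, although the nodes still end up in one subnet. The name resolution entries and passwordless SSH then stop working and have to be configured again. If you only ever use one network, there is no impact.

Run the following as root on the VM (192.168.1.7 in my case):

- `nmcli connection modify 'ens33' ipv4.method auto connection.autoconnect yes` switches the NIC to DHCP mode (dynamic IP assignment).
- `nmcli connection up 'ens33'` activates the connection.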

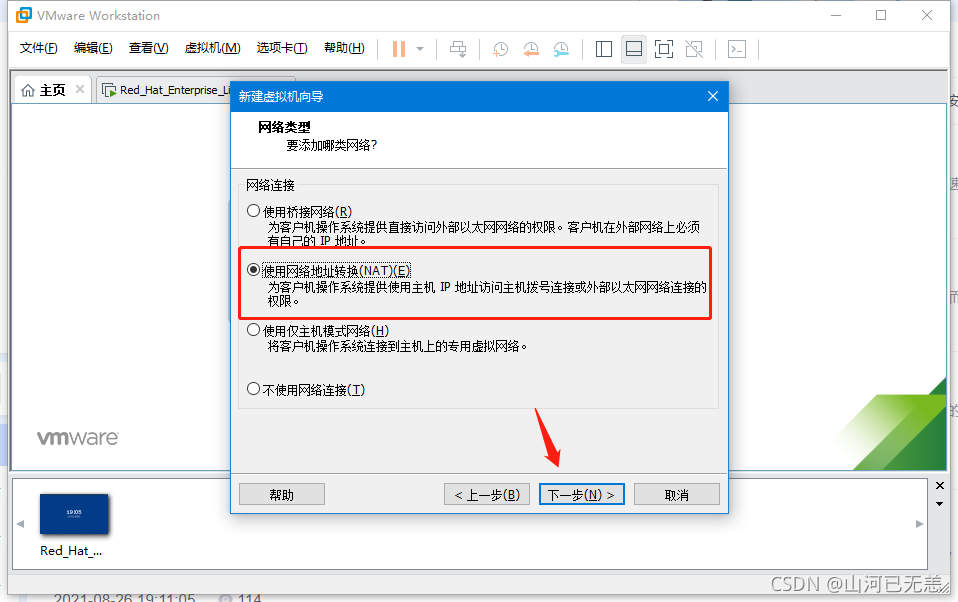

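**If bridged networking turns out to be inconvenient, use NAT mode instead, that is, let VMware's VMnet8 virtual network (or VMnet1, the host-only one) act as the virtual switch. Then changing IPs are no longer a concern.**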

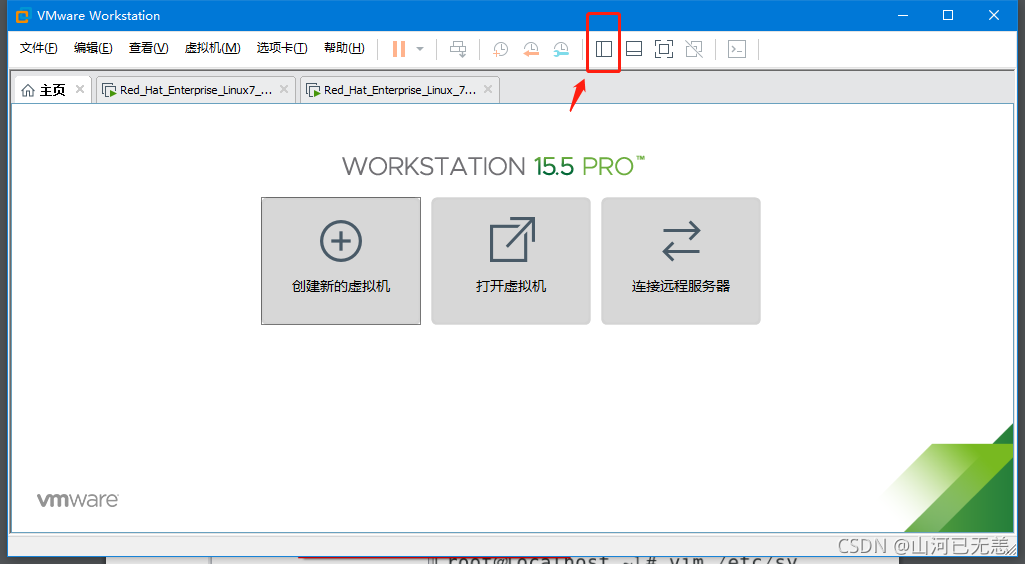

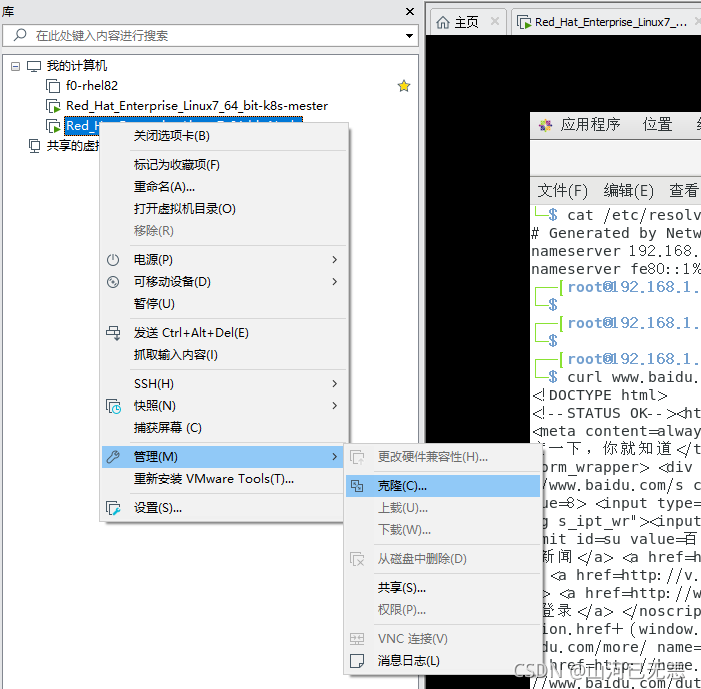

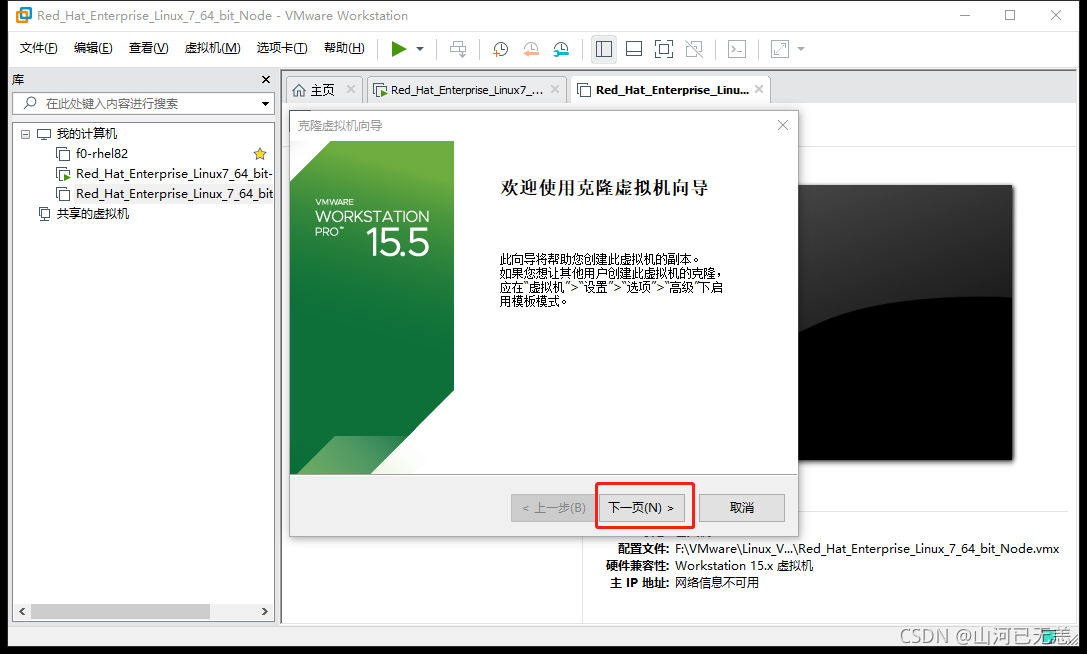

3. Cloning the machine

Cloning steps:

- Shut down the virtual machine to be cloned.

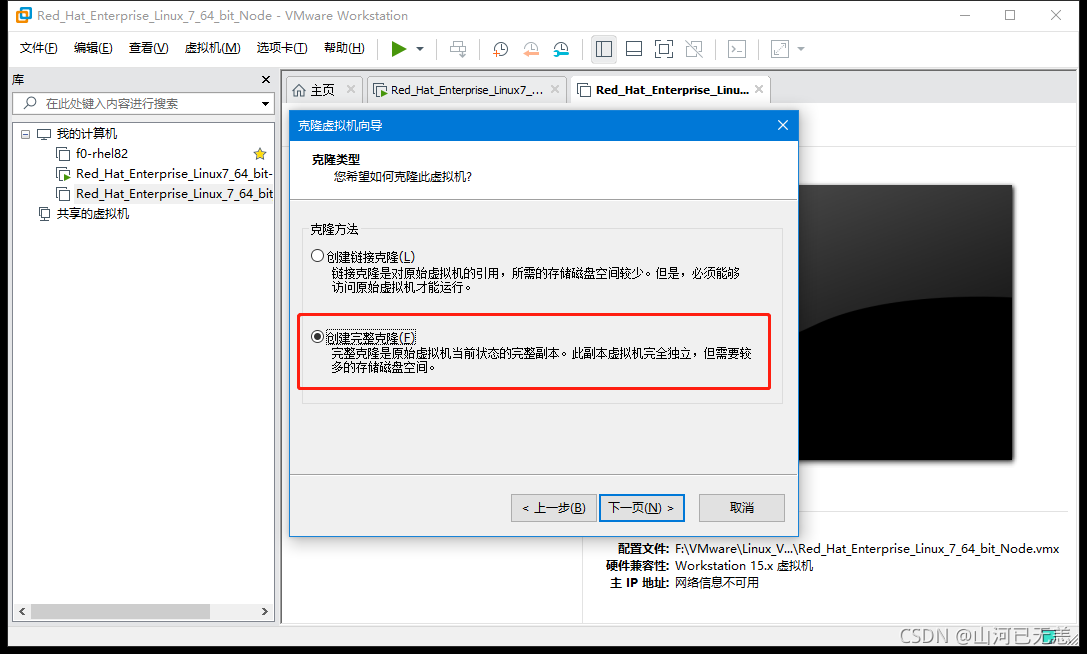

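- The difference between a linked clone and a full clone:
  - Linked clone: the clone takes very little disk space, but the source VM must remain usable, otherwise the clone cannot be used either.
  - Full clone: the clone is independent of the source VM, and deleting the source does not affect the clone.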

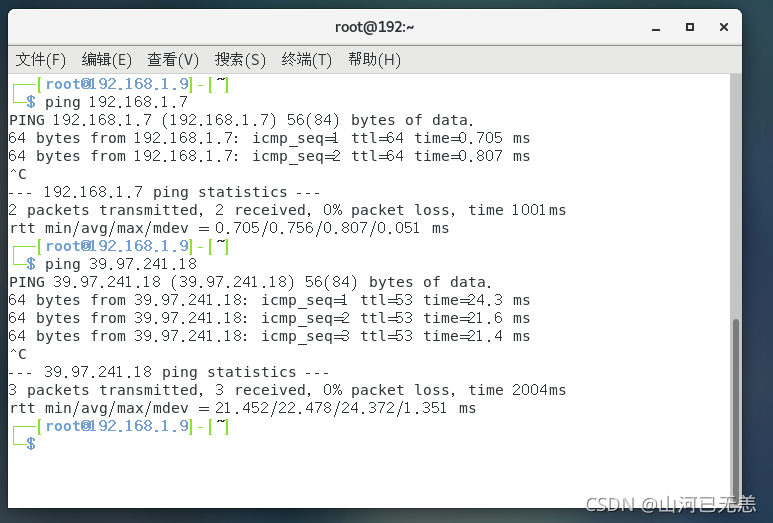

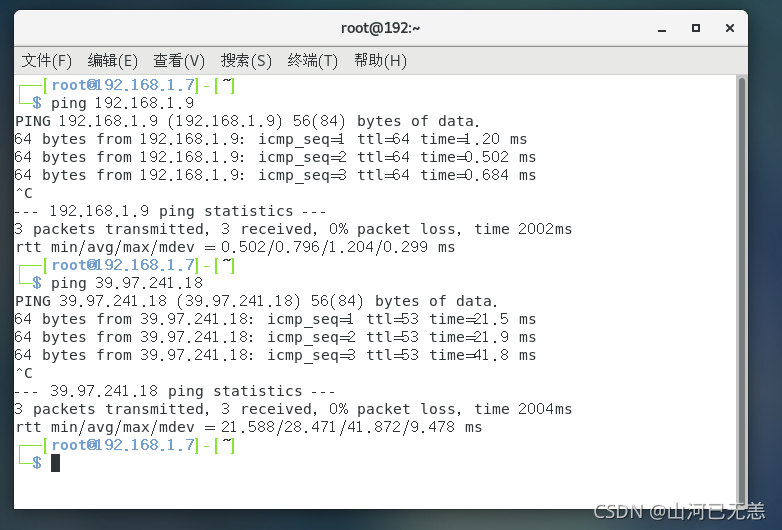

- Test it: the clone can reach the internet (39.97.241 is my Alibaba Cloud public IP), the physical host, and the other node.

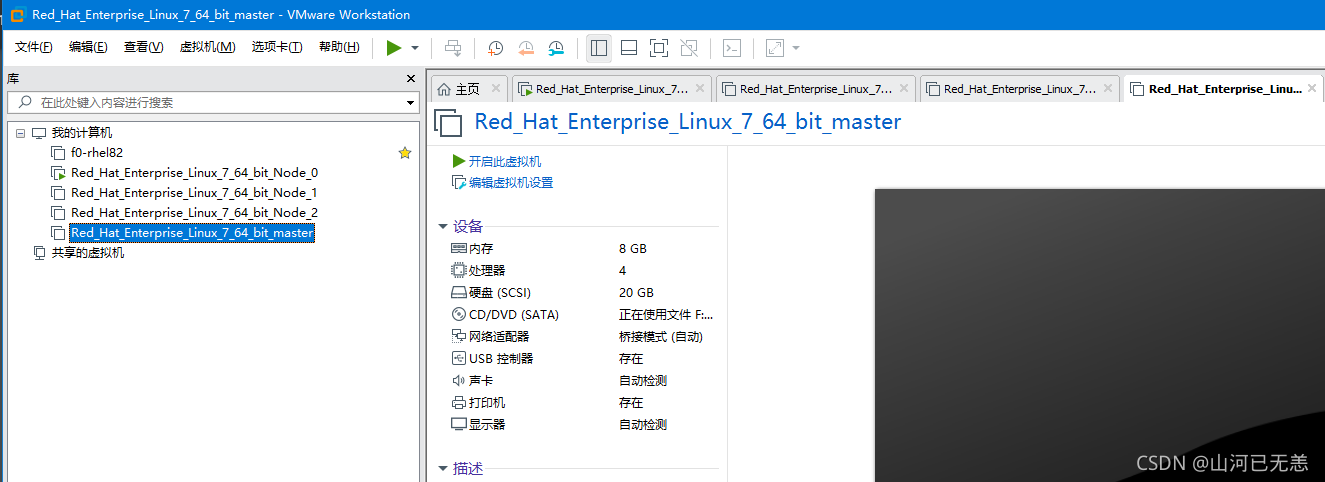

We clone the remaining Node machine and the Master machine in the same way; this is not shown here.

- When cloning the rest, if there is not enough memory to start a VM, shut the virtual machines down and adjust their memory.
- Remember to configure a static IP on each clone: `nmcli connection modify 'ens33' ipv4.method manual ipv4.addresses 192.168.1.9/24 ipv4.gateway 192.168.1.1 connection.autoconnect yes`, then `nmcli connection up 'ens33'`.

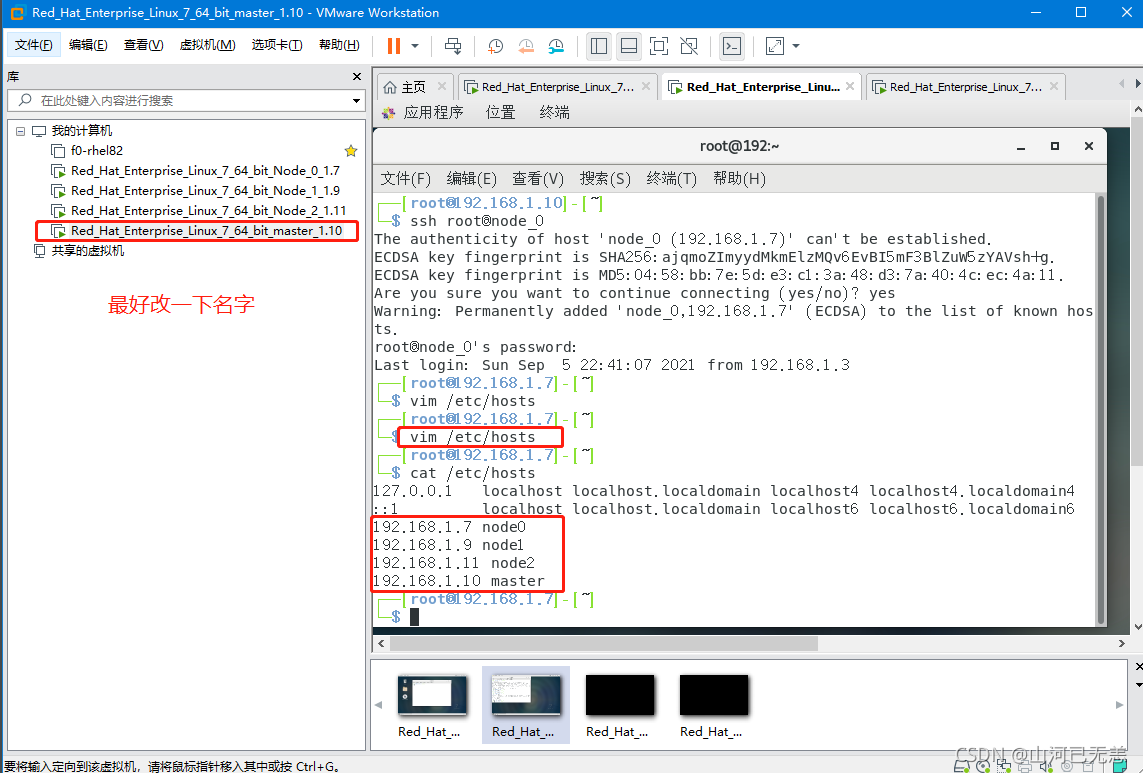

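4. Name resolution from the control node to the compute nodes

Master node DNS configuration:

Configure name resolution on the Master so that the nodes can be reached by host name. For convenience you can also change the host name of each node. The mappings are edited in /etc/hosts.

`┌──[root@192.168.1.10]-[~]`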

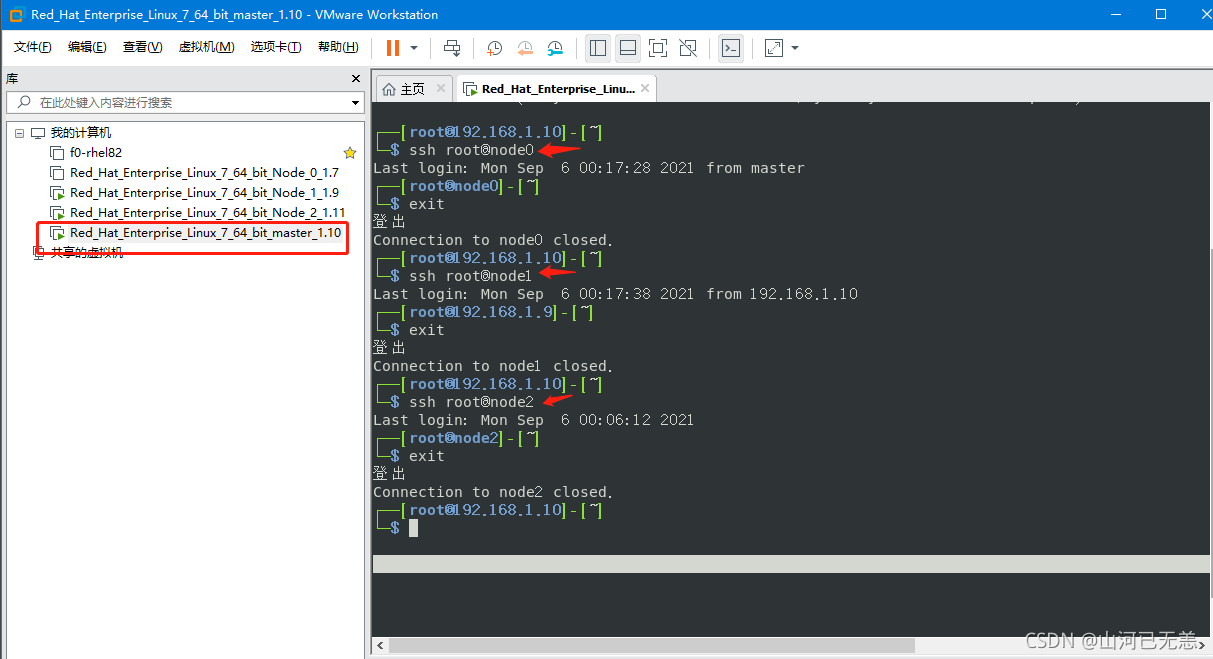
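As a rough sketch of what the /etc/hosts mappings could look like, the snippet below prints one entry per machine. The host names and addresses are the ones this article uses in its later tables (master, node1, node2); they are assumptions about your setup and should be replaced with the addresses your own VMs received.

```shell
# Sketch: print /etc/hosts entries for the lab machines.
# The name/IP pairs are assumptions taken from the tables later in the article.
hosts_entries() {
  cat <<'EOF'
192.168.1.10 master
192.168.1.9 node1
192.168.1.11 node2
EOF
}

# Preview the entries. To apply them on a machine (as root):
#   hosts_entries >> /etc/hosts
hosts_entries
```

Printing first and appending by hand keeps the snippet harmless to run, and makes it easy to spot a wrong address before it lands in /etc/hosts.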

5. Passwordless SSH from the control node to the compute nodes

Configure passwordless SSH login on the Master node:

Generate a key pair with ssh-keygen, pressing Enter at every prompt:

`┌──[root@192.168.1.10]-[~]`

Copy the public key to the node with ssh-copy-id:

`ssh-copy-id root@node0`

Test the passwordless login. The host name of Node1 was not changed here, so its prompt shows the IP address:

`ssh root@node0`
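With several nodes, the key distribution can be wrapped in a small loop. The following is only a sketch: the `run` and `distribute_key` helpers are hypothetical names, the host names passed in are placeholders, and the default is a dry run that prints the commands instead of executing them.

```shell
# Sketch: generate a key pair once, then copy the public key to each node.
# DRY_RUN=1 (the default here) only prints the commands.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

distribute_key() {
  # Create the key pair only if none exists yet; -N "" means no passphrase.
  if [ ! -f "$HOME/.ssh/id_rsa" ]; then
    run ssh-keygen -t rsa -N "" -f "$HOME/.ssh/id_rsa"
  fi
  for host in "$@"; do
    run ssh-copy-id "root@$host"
  done
}

# Placeholder host names; use the names or IPs of your own nodes.
distribute_key node1 node2
```

Setting `DRY_RUN=0` before calling `distribute_key` runs the commands for real; `ssh-copy-id` will then ask for each node's root password once.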

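At this point the Linux environment is ready, and anyone who wants to learn Linux can start from here. I have also collected my Linux study notes, with some hands-on exercises, for anyone interested.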

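II. Ansible Installation and Configuration

For convenience we work directly from the physical machine, since SSH is already configured. My machine does not have enough memory, so I can only run three VMs.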

| Host name | IP | Role | Note |
|---|---|---|---|
| master | 192.168.1.10 | controller | Control machine |
| node1 | 192.168.1.9 | node | Managed machine |
| node2 | 192.168.1.11 | node | Managed machine |

1. SSH to the control node (192.168.1.10), configure the yum repository, and install Ansible

`┌──(liruilong㉿Liruilong)-[/mnt/e/docker]`

Search for the ansible package:

`┌──[root@master]-[/etc/yum.repos.d]`

The Alibaba Cloud yum mirror has no ansible package, so we install it from EPEL:

`┌──[root@master]-[/etc/yum.repos.d]`

Search for the ansible package again and install it:

`┌──[root@master]-[/etc/yum.repos.d]`

`┌──[root@master]-[/etc/yum.repos.d]`

View the host inventory:

`┌──[root@master]-[/etc/yum.repos.d]`

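2. Ansible environment configuration

We use the ordinary account liruilong here, the user created during installation. In production a dedicated user is configured and root is not used.

1. Write the main configuration file ansible.cfg

`┌──[root@master]-[/home/liruilong]`

2. Host inventory

The inventory lists the managed hosts. Entries can be domain names, IPs, groups ([group name]) or groups of groups ([group name:children]), and a user name and password can also be set explicitly.

`[liruilong@master ansible]$ vim inventory`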
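For orientation, here is a minimal sketch of what the two files might contain. The contents are assumptions, not the article's actual files: the `nodes` group name matches the `ansible nodes ...` commands used later, `remote_user` is the liruilong account, and `write_ansible_config` is a hypothetical helper that writes both files into a directory of your choice.

```shell
# Sketch: write a minimal ansible.cfg and inventory into a directory.
# All values are assumptions; adapt user, paths and host names to your setup.
write_ansible_config() {
  dir="$1"
  mkdir -p "$dir"
  cat > "$dir/ansible.cfg" <<'EOF'
[defaults]
inventory = ./inventory
remote_user = liruilong
roles_path = ./roles

[privilege_escalation]
become = True
become_method = sudo
become_user = root
become_ask_pass = False
EOF
  cat > "$dir/inventory" <<'EOF'
[nodes]
node1
node2
EOF
}

# Write into OUT_DIR if set, otherwise into a throwaway temp directory.
out_dir="${OUT_DIR:-$(mktemp -d)}"
write_ansible_config "$out_dir"
echo "wrote ansible.cfg and inventory to $out_dir"
```

Keeping `ansible.cfg` next to the inventory means Ansible picks both up automatically when it is run from that directory, which is how the later commands in this article are invoked.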

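3. Configure passwordless SSH for the liruilong user

On the master, as the liruilong user, configure each of the three machines in turn:

`[liruilong@master ansible]$ ssh-keygen`

`[liruilong@master ansible]$ ssh-copy-id node1`

node2 and master need the same:

`[liruilong@master ansible]$ ssh-copy-id node2`

4. Configure privilege escalation for the liruilong user

There was a problem here. Passwordless sudo was configured on my machines, but it did not take effect the first time and a password was still required. After that no password was needed, yet Ansible still failed. I later found that creating a file named after the user under /etc/sudoers.d and putting the grant there solves it; I do not know the reason.

node1

`┌──[root@node1]-[~]`

`┌──[root@node2]-[~]`

node2 and master are set up in the same way.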
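A drop-in file under /etc/sudoers.d usually holds a single grant line. The sketch below prints such a line for a given user; `sudoers_line` is a hypothetical helper and the exact grant the author used is not shown in the source. One plausible explanation for the behaviour described above, offered only as a guess: sudoers applies the last matching rule, and /etc/sudoers.d is normally included at the end of /etc/sudoers, so a drop-in can override an earlier rule that still asks for a password.

```shell
# Sketch: build a passwordless-sudo grant for one user.
# The grant shown is an assumption, not the article's actual file content.
sudoers_line() {
  printf '%s ALL=(ALL) NOPASSWD: ALL\n' "$1"
}

# Preview the line. To install it on a node (as root):
#   sudoers_line liruilong > /etc/sudoers.d/liruilong
#   chmod 0440 /etc/sudoers.d/liruilong
#   visudo -cf /etc/sudoers.d/liruilong   # syntax check before relying on it
sudoers_line liruilong
```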

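5. Test an ad-hoc command

Syntax: `ansible <host pattern from the inventory> -m <module name> [-a '<task arguments>']`

`[liruilong@master ansible]$ ansible all -m ping`

At this point Ansible is configured, and you can learn Ansible in this environment. I have also collected my Ansible study notes, mainly written for the RHCE exam and including some hands-on exercises, for anyone interested.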

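III. Installing and Configuring Docker and the K8s Packages

Docker and K8s can be installed with roles based on rhel-system-roles, with custom roles, or directly with playbooks. Here we write the playbooks directly and build things up step by step. For Docker itself, see my notes on container technology if you are interested. The focus here is K8s.

1. Deploy Docker with Ansible

There are two ways to deploy: run a ready-made script written by someone else, or go step by step yourself. We take the second.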

The machines we have now:

| Host name | IP | Role | Note |
|---|---|---|---|
| master | 192.168.1.10 | kube-master | Management node |
| node1 | 192.168.1.9 | kube-node | Compute node |
| node2 | 192.168.1.11 | kube-node | Compute node |

1. Configure the yum repository on the nodes

Packages will be installed on the nodes, so they need a yum repository. Ansible offers several ways to configure one, for example the yum_repository module. For convenience we simply run shell commands.

`[liruilong@master ansible]$ ansible nodes -m shell -a 'mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup;wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo'`

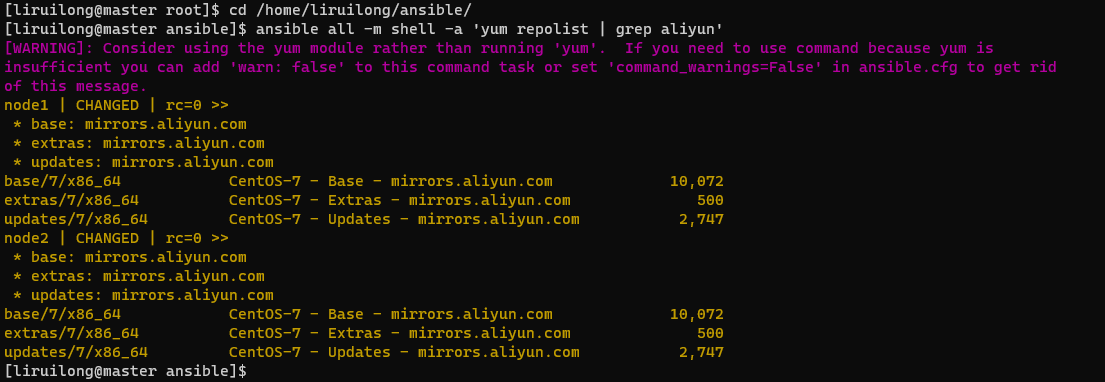

With the yum repository configured, confirm it:

`[liruilong@master ansible]$ ansible all -m shell -a 'yum repolist | grep aliyun'`

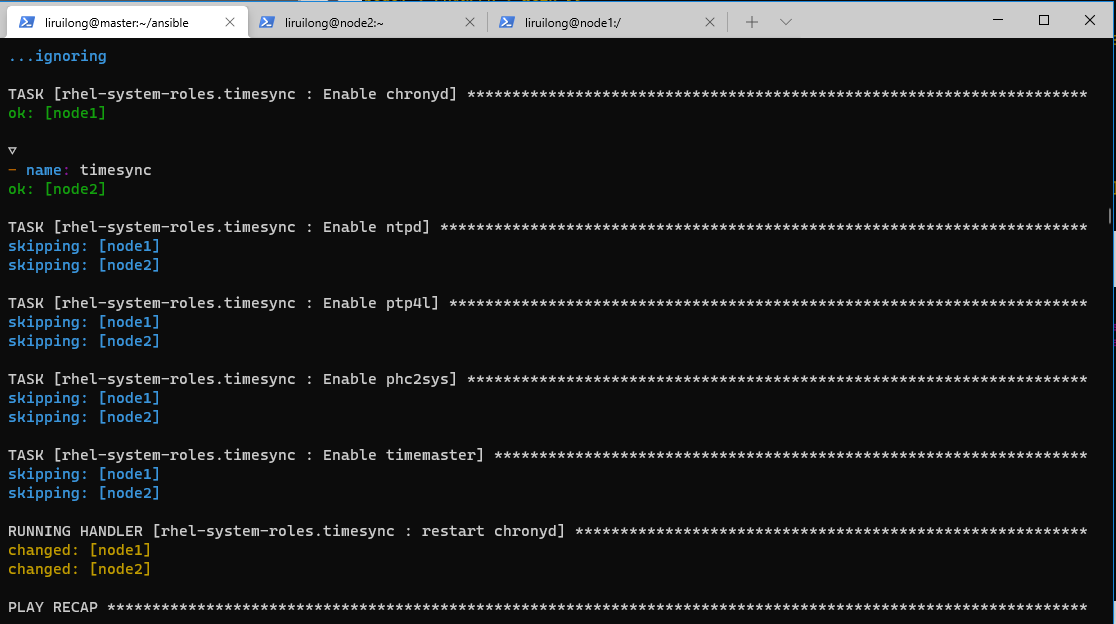

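2. Configure time synchronisation

For convenience we use an Ansible role: install the RHEL system roles package, copy the role directory into our roles directory, and create the playbook timesync.yml.

`┌──[root@master]-[/home/liruilong/ansible]`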
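The playbook itself is short. Below is a sketch of what timesync.yml might look like, written out by a small shell helper. It assumes the copied role directory is named `rhel-system-roles.timesync` and uses `ntp.aliyun.com` as the NTP server; both are assumptions, and `write_timesync_playbook` is a hypothetical helper name.

```shell
# Sketch: write a timesync playbook that applies the timesync system role.
# Role name and NTP server are assumptions, not confirmed by the article.
write_timesync_playbook() {
  cat > "$1" <<'EOF'
---
- name: configure time synchronisation
  hosts: all
  vars:
    timesync_ntp_servers:
      - hostname: ntp.aliyun.com
        iburst: yes
  roles:
    - rhel-system-roles.timesync
EOF
}

# Write into OUT_DIR if set, otherwise into a throwaway temp directory.
out_dir="${OUT_DIR:-$(mktemp -d)}"
write_timesync_playbook "$out_dir/timesync.yml"
cat "$out_dir/timesync.yml"
# Then, from the directory that holds ansible.cfg:
#   ansible-playbook timesync.yml
```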

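3. Docker environment initialisation

| Step |
|---|
| Install docker |
| Remove the firewall |
| Enable IP forwarding |
| Fix the firewall bug in this version |
| Restart the docker service and enable it at boot |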

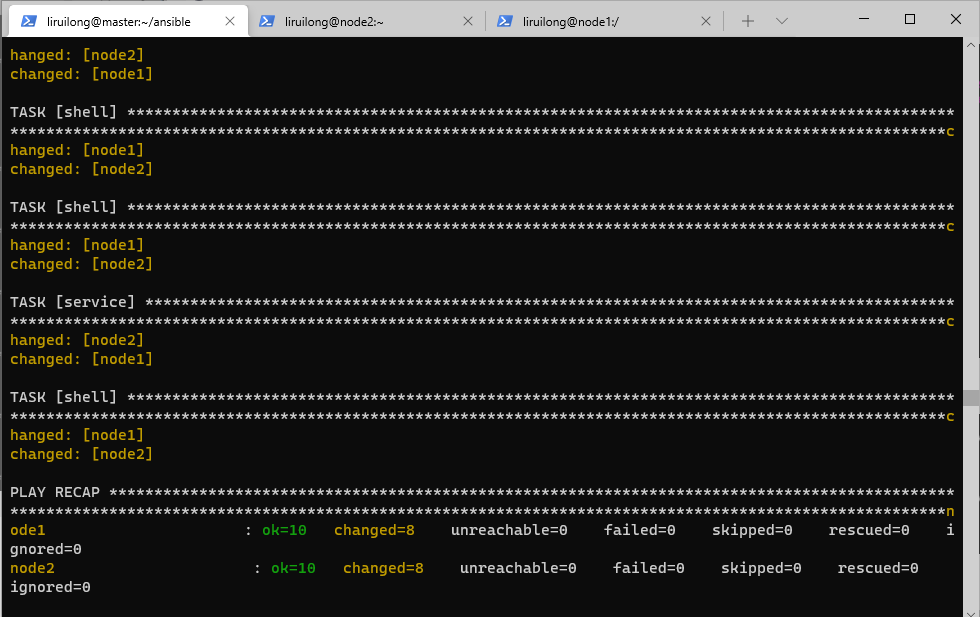

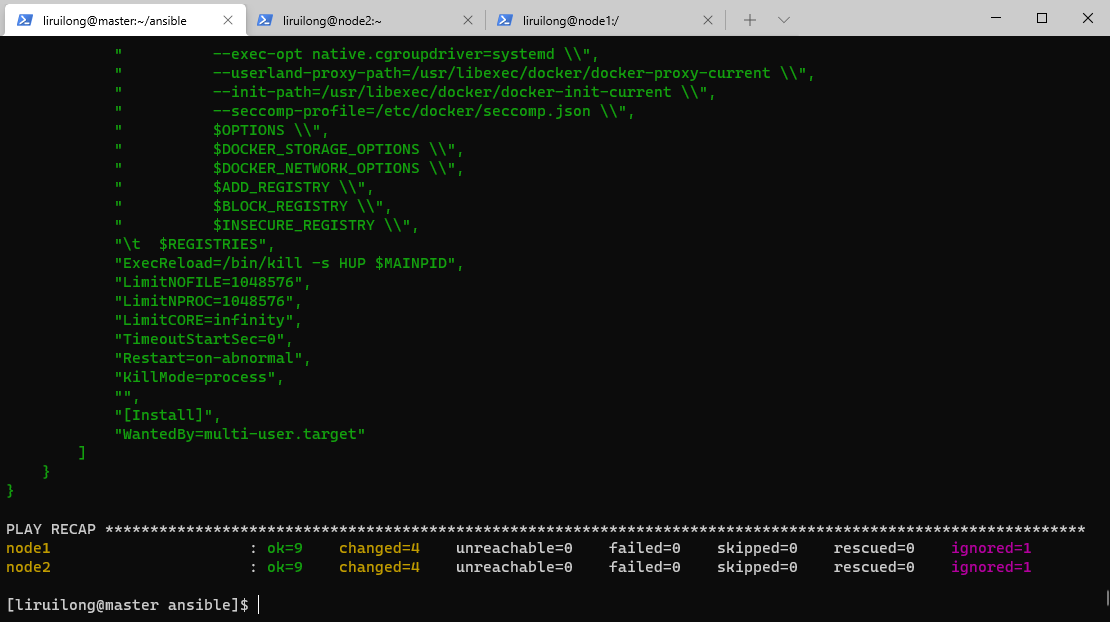

Write the Docker initialisation playbook install_docker_playbook.yml:

`- name: install docker on node1,node2`

Run the playbook:

`[liruilong@master ansible]$ cat install_docker_playbook.yml`
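To make the five steps concrete, here is one way the playbook could be written, generated by a hypothetical `write_docker_playbook` helper. This is a sketch under assumptions, not the author's playbook: in particular, the "firewall bug" fix is assumed to be the common workaround of setting the iptables FORWARD policy back to ACCEPT after Docker starts, and the `nodes` group is assumed to exist in the inventory.

```shell
# Sketch: write a playbook covering the five Docker initialisation steps.
# The FORWARD-policy fix and the "nodes" group are assumptions.
write_docker_playbook() {
  cat > "$1" <<'EOF'
---
- name: install docker on node1,node2
  hosts: nodes
  become: yes
  tasks:
    - name: install docker
      yum:
        name: docker
        state: present

    - name: remove firewalld
      yum:
        name: firewalld
        state: absent

    - name: enable IP forwarding
      sysctl:
        name: net.ipv4.ip_forward
        value: "1"
        state: present
        reload: yes

    - name: accept forwarded packets once docker has started
      lineinfile:
        path: /usr/lib/systemd/system/docker.service
        insertafter: '^\[Service\]'
        line: ExecStartPost=/sbin/iptables -P FORWARD ACCEPT

    - name: restart docker and enable it at boot
      systemd:
        name: docker
        state: restarted
        enabled: yes
        daemon_reload: yes
EOF
}

out_dir="${OUT_DIR:-$(mktemp -d)}"
write_docker_playbook "$out_dir/install_docker_playbook.yml"
echo "wrote $out_dir/install_docker_playbook.yml"
```

The `daemon_reload` on the last task matters because the previous task edits the unit file; without it systemd would restart Docker with the old unit definition.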

Next we write a check playbook, install_docker_check.yml, to verify the Docker installation:

`- name: install_docker-check`

`[liruilong@master ansible]$ ls`

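2. etcd installation

Install etcd (a key-value store) on the kube-master and create the network configuration.

| Step |
|---|
| Install etcd with yum |
| Edit the etcd configuration file and change the client listen address; 0.0.0.0 means listening on all addresses |
| Enable IP forwarding |
| Start the service and enable it at boot |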

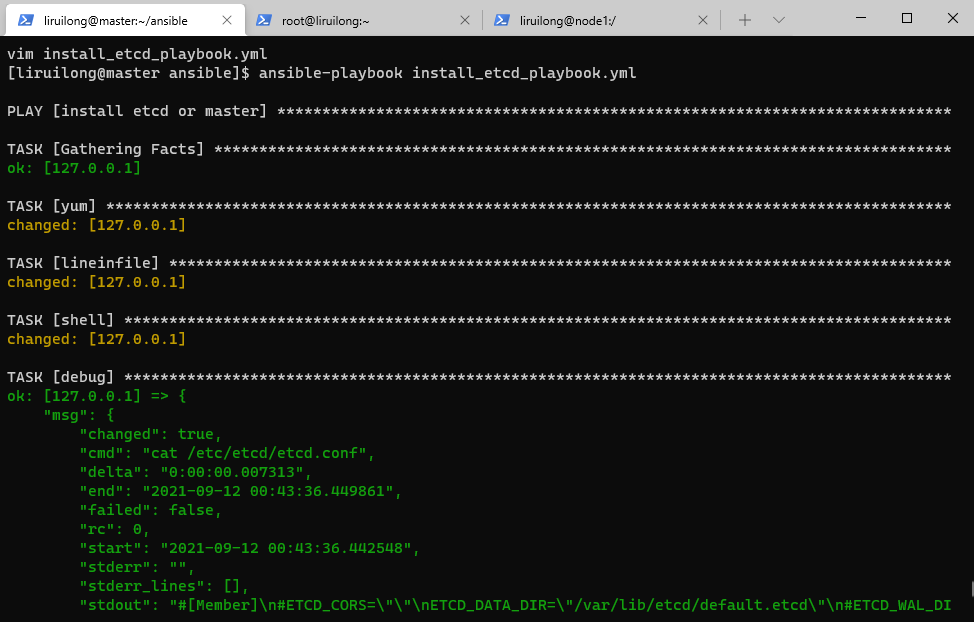

Write the Ansible playbook install_etcd_playbook.yml:

`- name: install etcd or master`

`[liruilong@master ansible]$ ls`
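The four etcd steps translate fairly directly into tasks. The sketch below is an assumption-laden illustration rather than the original playbook: `hosts: master` assumes the control node is listed in the inventory (if it is not, `localhost` with a local connection would be the alternative), the configuration path /etc/etcd/etcd.conf is the one used by the CentOS etcd package, and `write_etcd_playbook` is a hypothetical helper.

```shell
# Sketch: write a playbook for installing and configuring etcd on the master.
# Host pattern and listen URL are assumptions.
write_etcd_playbook() {
  cat > "$1" <<'EOF'
---
- name: install etcd on master
  hosts: master
  become: yes
  tasks:
    - name: install etcd
      yum:
        name: etcd
        state: present

    - name: listen for clients on all addresses
      lineinfile:
        path: /etc/etcd/etcd.conf
        regexp: '^ETCD_LISTEN_CLIENT_URLS='
        line: ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

    - name: enable IP forwarding
      sysctl:
        name: net.ipv4.ip_forward
        value: "1"
        state: present
        reload: yes

    - name: start etcd and enable it at boot
      service:
        name: etcd
        state: started
        enabled: yes
EOF
}

out_dir="${OUT_DIR:-$(mktemp -d)}"
write_etcd_playbook "$out_dir/install_etcd_playbook.yml"
echo "wrote $out_dir/install_etcd_playbook.yml"
```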

1. Create the network configuration: 10.254.0.0/16

- `etcdctl ls /`
- `etcdctl mk /atomic.io/network/config '{"Network": "10.254.0.0/16", "Backend": {"Type":"vxlan"}} '`
- `etcdctl get /atomic.io/network/config`

`[liruilong@master ansible]$ etcdctl ls /`

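3. flannel installation and configuration (on every K8s machine)

flannel is a network planning service. It gives the Docker containers created on the different node hosts of a K8s cluster virtual IP addresses that are unique within the cluster. It also builds an overlay network between these virtual IP addresses, through which containers on different hosts can reach each other. Its effect is roughly similar to that of a VLAN.

The kube-master management host has no Docker, so it only needs flannel installed, its configuration changed, and the service started and enabled at boot.

1. Add the master node to the Ansible inventory

Packages have to be installed and configured on the master as well, so we add the master node to the inventory.

`[liruilong@master ansible]$ sudo cat /etc/hosts`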

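2. flannel installation and configuration

| Step |
|---|
| Install the flannel network package |
| Edit the configuration file /etc/sysconfig/flanneld |
| Start the service (flannel must be started before docker). Remember to open the port on the master, or turn its firewall off |
| Start flannel first, then docker |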

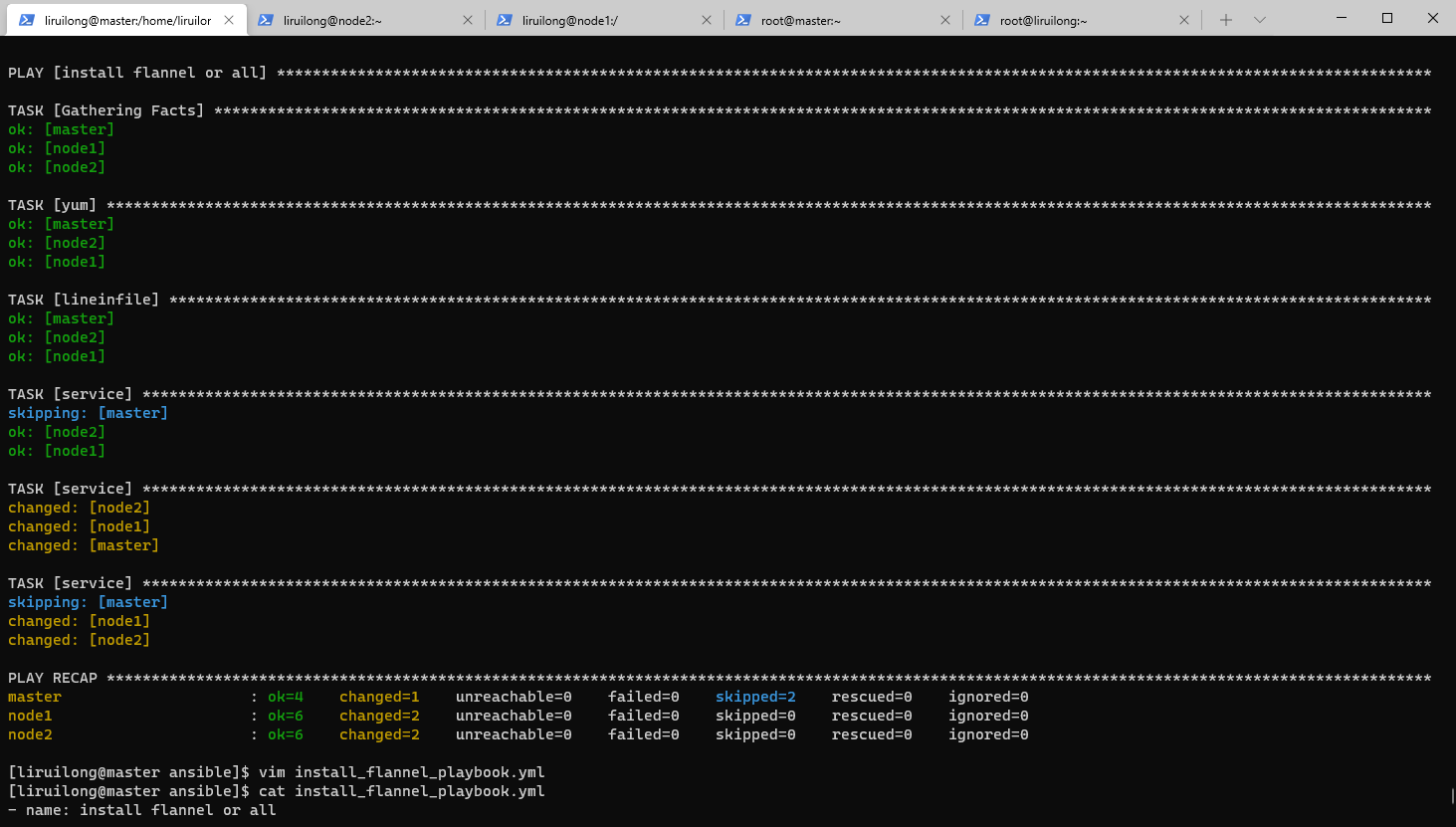

Write the playbook install_flannel_playbook.yml:

`- name: install flannel or all`

Before running the playbook, stop firewalld on the master. Alternatively, open port 2379.

`[liruilong@master ansible]$ su root`

`┌──[root@master]-[/home/liruilong/ansible]`
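The ordering constraint (flannel before docker) is the part most easily lost, so here is a sketch of how a playbook might encode it. It is not the author's playbook: the etcd endpoint uses the master address from the table above, the `nodes` group name is an assumption, and `write_flannel_playbook` is a hypothetical helper.

```shell
# Sketch: write a playbook that installs flannel everywhere and restarts
# docker only on the compute nodes, after flanneld is up.
write_flannel_playbook() {
  cat > "$1" <<'EOF'
---
- name: install flannel on all
  hosts: all
  become: yes
  tasks:
    - name: install flannel
      yum:
        name: flannel
        state: present

    - name: point flanneld at etcd on the master
      lineinfile:
        path: /etc/sysconfig/flanneld
        regexp: '^FLANNEL_ETCD_ENDPOINTS='
        line: FLANNEL_ETCD_ENDPOINTS="http://192.168.1.10:2379"

    - name: start flanneld first and enable it at boot
      service:
        name: flanneld
        state: restarted
        enabled: yes

    - name: then restart docker on the compute nodes
      service:
        name: docker
        state: restarted
      when: inventory_hostname in groups['nodes']
EOF
}

out_dir="${OUT_DIR:-$(mktemp -d)}"
write_flannel_playbook "$out_dir/install_flannel_playbook.yml"
echo "wrote $out_dir/install_flannel_playbook.yml"
```

Tasks in a play run in order on each host, so listing the flanneld task before the docker task is enough to get the required start order; the `when` condition skips the docker restart on the master, which has no Docker.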

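3. Test flannel

Ansible's Docker modules could be used here as well; for convenience we use the shell module directly.

Write install_flannel_check.yml:

| Step |
|---|
| Print the Docker bridge interface docker0 on the node machines |
| Run a container from the centos image on each node machine, named after the host name |
| Print the image ID information |
| Print the flannel interface information of all machines |

`- name: flannel config check`

Run the playbook:

`[liruilong@master ansible]$ cat install_flannel_check.yml`

Check whether the centos container on node1 can ping the centos container on node2:

`[liruilong@master ansible]$ ssh node1`

The ping succeeds. At this point the flannel network is configured and containers on different machines can reach each other.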

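4. Install and deploy kube-master

With the network configured, the next step is to install and configure kube-master on the master management node. First check whether packages are available:

`[liruilong@master ansible]$ yum list kubernetes-*`

If a 1.10 package is available, it is better to use 1.10. Only 1.5 is available here, so we try 1.5 first; I could not find a yum repository with 1.10.

| Step |
|---|
| Turn off swap and SELinux |
| Configure the K8s yum repository |
| Install the K8s packages |
| Edit the global configuration file /etc/kubernetes/config |
| Edit the master configuration file /etc/kubernetes/apiserver |
| Start the services |
| Verify the services with kubectl get cs |

Write the playbook install_kube-master_playbook.yml:

`- name: install kube-master or master`

Run the playbook:

`[liruilong@master ansible]$ ansible-playbook install_kube-master_playbook.yml`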

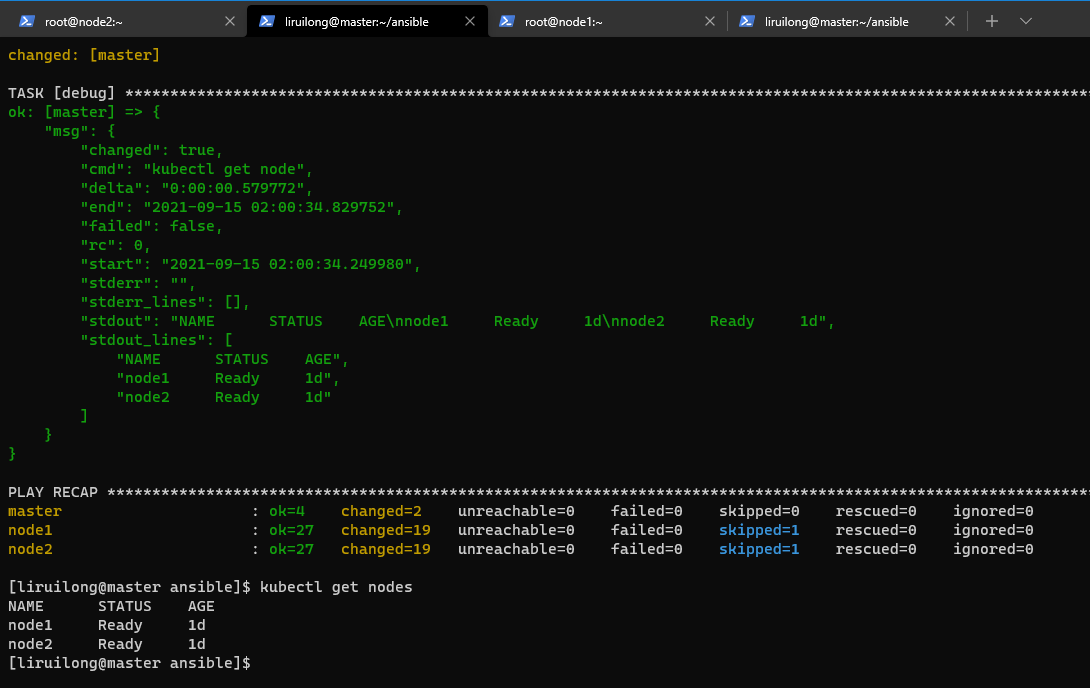

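5. Install and deploy kube-node

Once the management node is installed, the compute nodes have to be deployed. kube-node is installed on every node server.

| Step |
|---|
| Turn off swap and SELinux |
| Configure the K8s yum repository |
| Install the K8s node packages |
| Edit the kube-node global configuration file /etc/kubernetes/config |
| Edit the node configuration file /etc/kubernetes/kubelet. Note that it contains a base image setting; if you have your own image registry, it is best to point it there |
| Generate the kubelet.kubeconfig file |
| Set the cluster: write the generated information into the kubelet.kubeconfig file |
| Install the Pod image |
| Start the services and verify |

Write the playbook install_kube-node_playbook.yml:

`[liruilong@master ansible]$ cat`

Run the playbook install_kube-node_playbook.yml:

`[liruilong@master ansible]$ ansible-playbook install_kube-node_playbook.yml`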

6. Install and deploy kube-dashboard

Install the dashboard image. kubernetes-dashboard is the web management panel for Kubernetes. Its version, including the configuration file, must match the K8s version.

`[liruilong@master ansible]$ ansible node1 -m shell -a 'docker search kubernetes-dashboard'`

Edit the dashboard manifest kube-dashboard.yaml on the kube-master:

`kind: Deployment`

Create the dashboard container from the YAML file, also on the kube-master:

`[liruilong@master ansible]$ vim kube-dashboard.yaml`

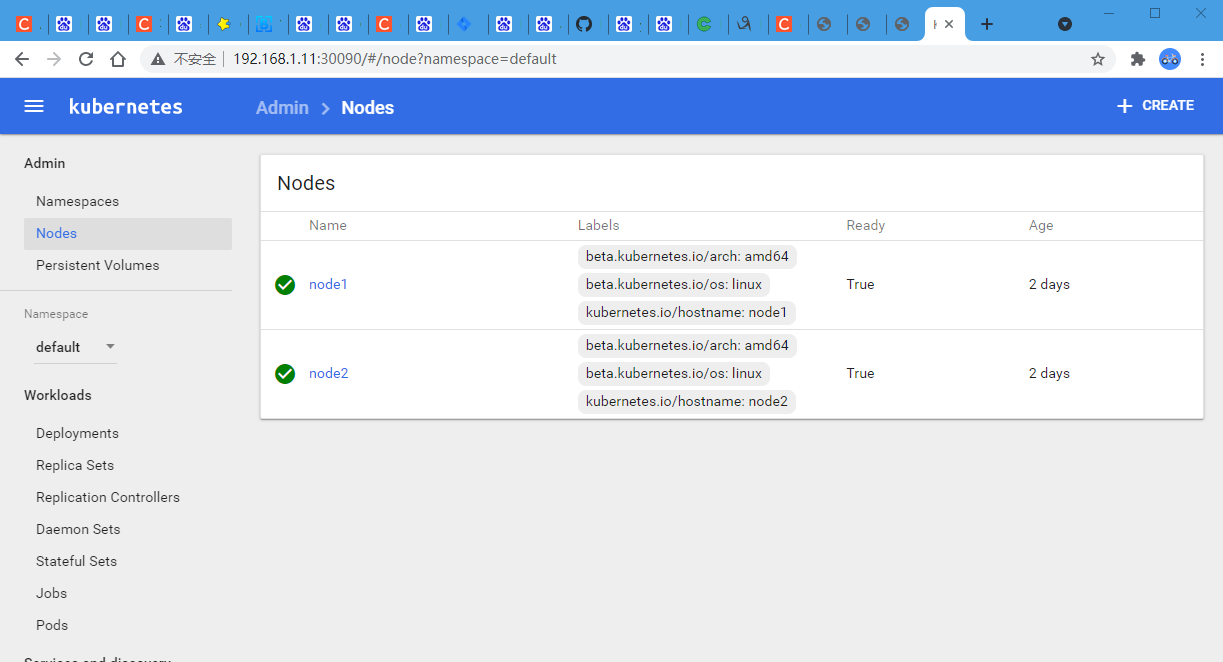

Check which node it is running on, then try to access it:

`[liruilong@master ansible]$ ansible nodes -a "docker ps"`

It runs on node2, so it can be reached at http://192.168.1.11:30090/. Let's test it.

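Afterword

That completes the whole Linux + Docker + Ansible + K8s learning environment. The way K8s is set up here is somewhat dated, but this is only the start of learning, so take it slowly. Next comes the enjoyable part: learning K8s.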


https://liruilongs.github.io/2021/09/05/K8s/环境部署-运维/从零搭建Linux+Docker+Ansible+kubernetes 学习环境/
